CacheCade performance depends much more on SSD read performance than on SSD write performance. How much it helps also depends on the capacity of the SSDs relative to the total size of the data hot spots: if the array being accelerated simply doesn't have many hot spots, the performance gain will be smaller.
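A rough way to see why SSD capacity and hot-spot size matter is the standard average-access-time formula for a cache. The sketch below is a simplified, hypothetical model, not LSI's: it assumes reads are spread uniformly over the hot spot, that a warmed-up cache holds as much of the hot spot as fits on the SSDs, and that `hot_read_fraction` (an invented parameter) is the share of all reads that target hot data. Latency values passed in are placeholders, not measurements from this review.

```python
def hit_ratio(ssd_gb: float, hot_gb: float, hot_read_fraction: float) -> float:
    """Share of all reads a warmed-up SSD cache serves (simplified, assumed model).

    Reads are assumed uniform over the hot spot, so only the portion of the
    hot spot that fits on the SSDs produces cache hits.
    """
    covered = 1.0 if hot_gb <= ssd_gb else ssd_gb / hot_gb
    return hot_read_fraction * covered


def avg_read_latency_ms(hit: float, ssd_ms: float, hdd_ms: float) -> float:
    """Average access time: hits are served at SSD speed, misses by the HDDs."""
    return hit * ssd_ms + (1 - hit) * hdd_ms
```

With few hot spots, `hot_read_fraction` is small, so even a large SSD cache leaves most reads on the HDDs; with a hot spot larger than the SSDs, the `covered` term caps the hit ratio.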

Once again, in testing the ultra-powerful 9265-8i we are running into the limitations of our drives. The caching results on the following pages take a bit longer to kick in than in the results LSI and Demartek have recorded. Part of the reason is that we are using four low-power 5400 rpm “green” hard drives. These give us a lower baseline level of IOPS for testing, so the caching algorithms may take slightly longer to identify the “hot” data.

The arrays LSI used for its internal testing generate 3,200 IOPS with twelve Seagate 15,000 rpm 6Gb/s SAS HDDs. Our RAID array generates only 200 IOPS in the single-zone read scenario and 500 IOPS in the multiple-zone read scenarios, so the difference is substantial: LSI's figure is sixteen times our single-zone result. The performance of the base array matters most when there is a large data hot spot, such as the 50 GB test file we are using here. For applications with smaller hot data regions, the ramp-up time will be shorter, even when the base HDD volume is slow.
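To put numbers on that gap, the sketch below computes per-drive IOPS from the figures above and a rough lower bound on how long each array needs just to read a 50 GB hot spot once. Two things here are assumptions for illustration only: the 4 KB request size, and the model in which every block must be read from the HDDs at least once before it can be cached. The 50 GB size is held constant across all three rows purely for comparison; CacheCade's actual ramp-up depends on LSI's own promotion heuristics.

```python
# IOPS and drive counts from the text; hot-spot size is the 50 GB test file.
ARRAYS = {
    "LSI, 12x 15K SAS": (3200, 12),
    "ours, single zone": (200, 4),
    "ours, multiple zones": (500, 4),
}

HOT_SPOT_BYTES = 50 * 10**9  # 50 GB, decimal gigabytes
IO_SIZE_BYTES = 4096         # ASSUMPTION: 4 KB random reads (not stated in the text)


def per_drive_iops(total_iops: float, drives: int) -> float:
    """Average IOPS contributed by each spindle in the array."""
    return total_iops / drives


def one_pass_hours(total_iops: float, hot_bytes: int = HOT_SPOT_BYTES,
                   io_bytes: int = IO_SIZE_BYTES) -> float:
    """Hours for the HDD array to read every block of the hot spot once.

    A lower bound on ramp-up under the assumed one-read-per-block model.
    """
    ios_needed = hot_bytes / io_bytes
    return ios_needed / total_iops / 3600


if __name__ == "__main__":
    for name, (iops, drives) in ARRAYS.items():
        print(f"{name:22s} {per_drive_iops(iops, drives):6.1f} IOPS/drive "
              f"{one_pass_hours(iops):6.2f} h per pass")
```

Under these assumptions a single pass takes about 1.06 hours on LSI's array versus about 16.95 hours at our single-zone rate, the same 16x ratio as the raw IOPS figures.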

Another limitation is the write speed of the MLC SSDs we are using, compared with the SLC SSDs used in LSI's internal testing. Once data has been identified for caching, it must be written to the SSDs before it can speed anything up.
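The two bottlenecks described so far, how quickly a slow base array exposes the hot data and how quickly the SSDs can absorb it once identified, can be illustrated with a toy model. This is a hypothetical hit-count promotion scheme written for illustration only; CacheCade's real promotion algorithm is LSI's own and is not described here, and `ToySsdCache`, `promote_after`, `ssd_writes_per_tick`, and `ticks_to_warm` are invented names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToySsdCache:
    """Hypothetical hit-count promotion cache (NOT LSI's CacheCade algorithm)."""
    promote_after: int        # reads of a block before it is queued for the SSD
    ssd_writes_per_tick: int  # blocks the SSD can write per tick (its write speed)
    hits: dict = field(default_factory=dict)
    pending: list = field(default_factory=list)
    cached: set = field(default_factory=set)

    def read(self, block: int) -> str:
        if block in self.cached:
            return "ssd"  # served from flash once promotion has completed
        self.hits[block] = self.hits.get(block, 0) + 1
        if self.hits[block] == self.promote_after:
            self.pending.append(block)  # identified as hot, not yet written
        return "hdd"

    def tick(self) -> None:
        # Only ssd_writes_per_tick pending blocks reach the SSD per tick;
        # the rest keep being served from the HDDs until their turn comes.
        batch = self.pending[:self.ssd_writes_per_tick]
        del self.pending[:self.ssd_writes_per_tick]
        self.cached.update(batch)


def ticks_to_warm(hot_blocks: int, reads_per_tick: int, promote_after: int,
                  ssd_writes_per_tick: int, max_ticks: int = 100_000) -> Optional[int]:
    """Ticks until every hot block is on the SSD, reading the hot set round-robin."""
    cache = ToySsdCache(promote_after, ssd_writes_per_tick)
    cursor = 0
    for t in range(1, max_ticks + 1):
        # Fixed read rate per tick, standing in for the base array's IOPS.
        for _ in range(reads_per_tick):
            cache.read(cursor % hot_blocks)
            cursor += 1
        cache.tick()
        if len(cache.cached) == hot_blocks:
            return t
    return None
```

In this model, `ticks_to_warm(1000, 100, 2, 200)` returns 20. Cutting the SSD write rate to 50 blocks per tick stretches warm-up to 30 ticks, and halving the read rate to 50 per tick (a slower base array) with the faster SSD stretches it to 40.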

MLC (multi-level cell) is a NAND flash architecture used for the internal NAND components of an SSD. The C300 drives we used for this testing are MLC drives. In the current generation of SSDs, MLC is slower than SLC by a large margin, especially for small random writes. MLC is the consumer-grade NAND found in typical non-enterprise drives, and it costs far less than SLC.

SLC (single-level cell) is the other NAND flash architecture used for the internal NAND components of an SSD. SLC devices are much faster in some areas and far more durable, and small random writes are a particular strength. SLC is the preferred type in the enterprise sector because of its durability and its speed advantage over MLC in many workloads. For a typical user, however, SLC drives are prohibitively expensive, and their prices have confined them almost exclusively to the enterprise sector.
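The names come down to bits stored per cell: SLC stores one bit, while the MLC parts in drives like the C300 store two. A cell holding n bits must distinguish 2^n charge levels. The sketch below shows that arithmetic under a deliberately simplified assumption, that the levels are evenly spaced across a fixed, normalized voltage window; real NAND level placement is more complex. Squeezing four levels into the same window leaves narrower margins between them, which helps explain why MLC must be programmed more carefully and tends to write more slowly.

```python
def charge_levels(bits_per_cell: int) -> int:
    """Distinct charge states a cell must hold to store bits_per_cell bits."""
    return 2 ** bits_per_cell


def relative_level_spacing(bits_per_cell: int) -> float:
    """Gap between adjacent levels as a fraction of a fixed voltage window.

    Simplified model (assumption): levels evenly spaced across the window.
    """
    return 1 / (charge_levels(bits_per_cell) - 1)


if __name__ == "__main__":
    for name, bits in (("SLC", 1), ("MLC", 2)):
        print(f"{name}: {charge_levels(bits)} levels, "
              f"spacing {relative_level_spacing(bits):.2f} of the window")
```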

Here is an excerpt from one of LSI's documents that illustrates the difference particularly well:

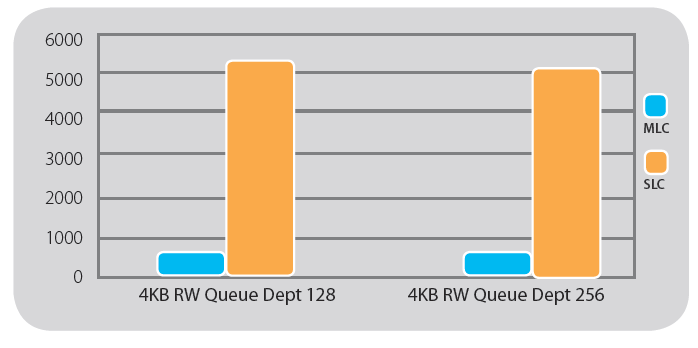

Note that CacheCade and FastPath performance depends on SSD performance. SSD performance depends on make, model, firmware, technology, specifications, and number of SSDs used. It is important to select the right SSD to achieve customers' performance requirements. The chart below displays IOMeter benchmark results on an MLC and an SLC SSD. The single drives were configured as RAID 0 arrays. 4KB random writes were performed on the SSDs over two hours.

As you can see, there is a huge difference in random write performance between the drives we are using in our testing and the types of drives used in enterprise applications. Due to cost constraints, we are unfortunately not able to provide write testing with SLC drives. It will be interesting to test with future generations of MLC drives: as technology progresses, MLC keeps gaining capability, mainly thanks to improved interleaving, wear-leveling algorithms, garbage collection features, and ECC.

As a side note, Intel's next line of E series (enterprise) SSDs will use MLC for the first time; previous generations were exclusively SLC. It will be very interesting to see whether this next generation of MLC devices can perform anywhere close to today's SLC drives.

The point of this explanation is that the relatively “slow” ramp-up time may be partly a limitation of the devices we have attached, and that we are not testing the full capabilities of this RAID controller. Much faster ramp-up times have certainly been demonstrated with SLC devices on larger, faster underlying arrays.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |