One of the major improvements in CacheCade Pro is that write data can also be cached. Write caching is a tremendous accelerator for any HDD array, large or small. Writing is a major weakness of any storage medium, especially in mixed read/write scenarios, and an unfortunate side effect of writing is that it slows down reading. For instance, if a large file is being read from the array and a small write is issued to the array at the same time, the speed of the large read suffers drastically because of the added latency.

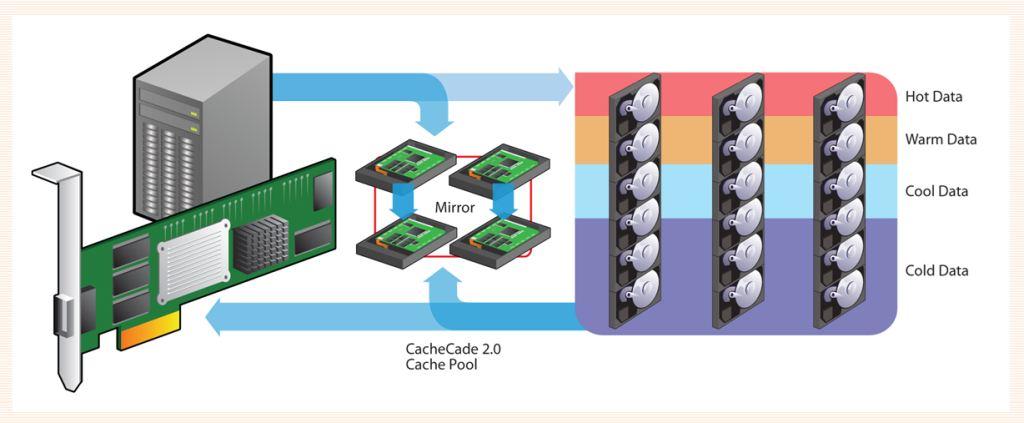

The idea behind write caching is to hold writes on the SSD layer until they can be passed along to the HDD array as unobtrusively as possible. Not all write data is cached. Write data is also monitored for “Hot” status and cached accordingly. This helps keep HDD I/O traffic as efficient as possible.
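To make the idea concrete, here is a minimal Python sketch of hot-write caching with a later flush to the HDD array. It is a toy model and not LSI's actual algorithm: the class name, the `hot_threshold` parameter, and the rule "a block is hot once it has been written a couple of times" are all assumptions made for illustration.

```python
from collections import Counter


class WriteBackCacheSketch:
    """Toy model of hot-write caching. Hypothetical, not the CacheCade Pro code."""

    def __init__(self, hot_threshold=2):
        # Assumption: a block counts as "hot" after this many writes.
        self.hot_threshold = hot_threshold
        self.write_counts = Counter()  # how often each block has been written
        self.ssd_dirty = {}            # block -> data held on the SSD layer
        self.hdd = {}                  # block -> data on the base HDD array

    def write(self, block, data):
        """Send hot writes to the SSD layer, everything else straight to HDD."""
        self.write_counts[block] += 1
        already_cached = block in self.ssd_dirty
        if already_cached or self.write_counts[block] >= self.hot_threshold:
            self.ssd_dirty[block] = data
            return "ssd"
        self.hdd[block] = data
        return "hdd"

    def read(self, block):
        """The newest copy wins: check the SSD layer before the HDD array."""
        if block in self.ssd_dirty:
            return self.ssd_dirty[block]
        return self.hdd.get(block)

    def flush(self):
        """Pass cached writes along to the HDD array, e.g. when it is idle."""
        flushed = len(self.ssd_dirty)
        self.hdd.update(self.ssd_dirty)
        self.ssd_dirty.clear()
        return flushed


cache = WriteBackCacheSketch(hot_threshold=2)
first = cache.write(7, b"v1")    # first write is cold and goes to the HDD
second = cache.write(7, b"v2")   # second write makes the block hot
```

In this model the HDD array only sees the second version of block 7 once `flush()` is called, which is the point of deferring writes: the array can absorb them when it is least disruptive to reads.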

An area that is sure to benefit by a large margin is RAID sets with parity. A drawback of these RAID levels is that they can be very slow at write operations. The main cause is that each small write forces the controller to read the existing data and parity, modify them, and then write both back. This read-modify-write cycle can cause significant performance problems. With write caching, RAID 5, RAID 6, and other RAID sets with redundancy can handle the demanding read-modify-write process much more easily. This lessens some of the performance disadvantages of RAID 5 and 6 and frees up performance at the back end of the storage system. It is made possible because the majority of application I/O requests to the base HDD volume are handled by the SSDs where the data is cached.
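The cost of read-modify-write is easiest to see in the parity arithmetic itself. The sketch below shows the standard XOR parity update for a single-parity (RAID 5 style) stripe in Python; the function names are illustrative, and RAID 6 adds a second, differently computed parity block that is not modeled here.

```python
def xor_bytes(a, b):
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def full_stripe_parity(chunks):
    """Parity of a whole stripe: the XOR of every data chunk."""
    parity = bytes(len(chunks[0]))  # all zeros
    for chunk in chunks:
        parity = xor_bytes(parity, chunk)
    return parity


def read_modify_write(old_data, old_parity, new_data):
    """New parity for a small write that replaces one chunk.

    The controller must READ old_data and old_parity, MODIFY the parity,
    then WRITE new_data and the new parity: four disk I/Os for one
    logical write.
    """
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)


stripe = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = full_stripe_parity(stripe)

new_chunk = b"\xaa\xbb"
new_parity = read_modify_write(stripe[1], parity, new_chunk)
stripe[1] = new_chunk

# The shortcut update matches recomputing parity over the full stripe.
assert new_parity == full_stripe_parity(stripe)
```

Every small write turning into two reads and two writes is why absorbing hot writes on the SSD layer takes so much pressure off a parity array.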

When caching write data, data redundancy and integrity become a very large concern. CacheCade Pro also brings with it redundant RAID sets for use with the caching volume. The SSD volume can be configured as RAID 0 for optimum performance when raw speed matters more than data availability (i.e., when the loss of the cache to an SSD failure is acceptable). RAID 1 and RAID 10 are also available for data protection. In a follow-on release, RAID 5 will also be introduced for the SSD caching volume.

After any type of failure, be it a power failure or otherwise, the controller will copy the cached write data onto the base array at power-up. This is a considerable advantage of using SSDs with their non-volatile flash. When an SSD volume is deleted, the controller will first flush all cached write data to the base array.

The overall goal of CacheCade Pro is to meld an SSD (or several) and an HDD array into one cohesive unit. The key is to utilize the strengths of each, not just those of the SSD. Intelligent caching improvements in the code, compared to the previous generation of CacheCade, have begun to leverage the strengths of both the HDD array and the SSD cache appropriately. Not all hot data should be cached in all scenarios. There are scenarios where the HDD array will perform well enough, or even better than the SSD, so caching isn’t necessary. It is important for the algorithm to detect these patterns so that the amount of cached data is minimized while overall performance is maximized. This type of optimization is more efficient, as it results in less wear on the caching SSDs and incurs less overhead. A nice benefit is that it leaves additional room for caching.

ANSWERING CONCERNS

SSDs are known for their reliability and performance, especially the enterprise-class variants. However, users have to keep in mind that they DO have a finite lifetime, much like any other device. With extremely high write loads over an extended period of time, even enterprise SLC drives will eventually wear out. RAID 1 and RAID 10 are important because they provide non-stop data availability, even in the event of an SSD failure. If an SSD fails, simply replace it and the rebuild will begin automatically. If the user has assigned a hot spare, the rebuild begins with no user intervention at all. This provides yet another layer of data security.

CacheCade Pro supports up to 512 GB of cache, and LSI plans to expand support to 2 TB in total in a future product update. You can use up to 32 SSD devices if you like, and performance does scale very well with the addition of more devices, as we will show shortly. A nice addition in this version is the ability to choose which base HDD arrays (one or more) are assigned to the CacheCade SSD volume. This gives the administrator a lot of flexibility to fully optimize the system.

One could gain the benefit of over-provisioning, and thus extend SSD endurance, simply by using several large-capacity SSDs. Because there is a limit on the amount of SSD space the controller can use, any excess capacity on the SSDs is simply left unformatted and unused, handily becoming a nice bit of over-provisioning.
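As a rough illustration of that arithmetic, the Python sketch below estimates how much capacity would be left over as spare area. It assumes the cache limit applies to the total capacity of a non-redundant (RAID 0 style) SSD volume and uses the 512 GB figure as the default; the function name and the example drive sizes are hypothetical.

```python
def unused_capacity(ssd_count, ssd_capacity_gb, cache_limit_gb=512):
    """Return (spare_gb, spare_fraction_of_raw) for a cache SSD volume.

    Assumption: the controller uses at most cache_limit_gb in total and
    leaves the rest of the raw capacity untouched.
    """
    raw_gb = ssd_count * ssd_capacity_gb
    used_gb = min(raw_gb, cache_limit_gb)
    spare_gb = raw_gb - used_gb
    return spare_gb, spare_gb / raw_gb


# Four hypothetical 200 GB SSDs: 800 GB raw, 512 GB usable as cache.
spare_gb, spare_fraction = unused_capacity(4, 200)
print(f"{spare_gb} GB spare, {spare_fraction:.0%} of raw capacity")
```

With these example numbers, 288 GB of the 800 GB raw capacity is never written by the cache. Two 100 GB drives, by contrast, fall below the limit and leave no extra spare area at all.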

A common configuration would be several small-capacity SSDs, which allows excellent performance scaling with small, cheaper devices. One of the big takeaways of this software is that you are ultimately looking to combine the speed of SSDs with the capacity of HDDs as cheaply as possible. This allows one server to essentially do the work of several.


The SSD Review The Worlds Dedicated SSD Education and Review Resource |
