INSIDE THE TOSHIBA PX02SSF040 CONTINUED

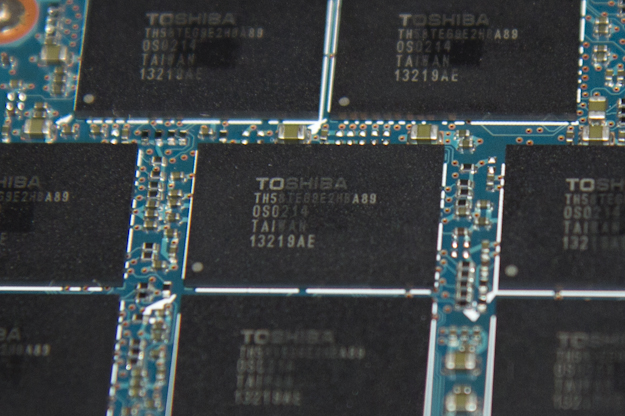

Things get more interesting when we focus on the NAND. Each 24nm eMLC NAND package holds 64GB. Spread across 16 packages, that brings the raw total to 1024GB. For those following along at home, that is 1024GB of NAND for a 400GB SSD. Normally, 400GB enterprise SSDs have only 512GB of raw NAND. With the PX02SS, we found double that amount.
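To make the arithmetic concrete, here is a minimal Python sketch of the capacity math. It uses only the figures quoted above, all in decimal GB. The function names are ours, chosen for illustration, and the two functions reflect two common ways of expressing overprovisioning: spare NAND relative to usable capacity, and spare NAND as a share of the raw NAND installed.

```python
# Capacities taken from the text above, in decimal gigabytes (GB).
PACKAGES = 16
GB_PER_PACKAGE = 64
USER_GB = 400


def op_over_user(raw_gb, user_gb):
    """Spare NAND expressed as a fraction of the usable capacity."""
    return (raw_gb - user_gb) / user_gb


def op_share_of_raw(raw_gb, user_gb):
    """Spare NAND expressed as a fraction of the raw NAND installed."""
    return (raw_gb - user_gb) / raw_gb


raw_gb = PACKAGES * GB_PER_PACKAGE  # 16 x 64GB packages

print(f"Raw NAND: {raw_gb}GB")
print(f"PX02SS, spare over usable: {op_over_user(raw_gb, USER_GB):.0%}")
print(f"512GB design, spare over usable: {op_over_user(512, USER_GB):.0%}")
print(f"PX02SS, spare as share of raw: {op_share_of_raw(raw_gb, USER_GB):.1%}")
```

Relative to usable capacity, the PX02SS works out to 156% spare area, against 28% for a 400GB drive built on 512GB of raw NAND. Counting in GiB instead of GB would shift the percentages slightly.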

This finding clears up a lot of odd things that were stuck in the back of our heads. First of all, the PCB and component layout is almost identical to the PX02SM. Also, the PX02SS line consists of capacities that are exactly half of what the PX02SM offers. We thought it was odd that there was such a large difference in write endurance between the two drives (PX02SS and PX02SM), considering they both use the same 24nm eMLC NAND. It also explains their close, but not identical, performance. While random 4KB reads are identical, 4KB writes are much higher on the PX02SS, which is a common side effect of increased overprovisioning.

Beyond random write performance, the 156% overprovisioning gives you a ton of write endurance. While the 400GB PX02SM is rated for 7.3PB of endurance, the PX02SS is rated at 21.9PB. This is also higher than the 18.3PB of endurance specified for the SSD800MM. If you move up to the 800GB model, the PX02SS is rated for 43.8PB of endurance!
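One way to put these endurance ratings in perspective is to convert them into full drive writes per day (DWPD). The sketch below does that in Python. Note that the 5-year period is our assumption for illustration; the rating period is not stated here, and a different period would scale the results accordingly. The function and variable names are also ours.

```python
ASSUMED_YEARS = 5  # assumption: the rating period is not given in the text


def drive_writes_per_day(endurance_pb, capacity_gb, years=ASSUMED_YEARS):
    """Full-capacity writes per day needed to consume the rated endurance."""
    endurance_gb = endurance_pb * 1_000_000  # 1PB = 1,000,000GB (decimal)
    return endurance_gb / (capacity_gb * 365 * years)


# (rated endurance in PB, usable capacity in GB), as quoted above
ratings = {
    "PX02SM 400GB": (7.3, 400),
    "PX02SS 400GB": (21.9, 400),
    "PX02SS 800GB": (43.8, 800),
}

for name, (endurance_pb, capacity_gb) in ratings.items():
    dwpd = drive_writes_per_day(endurance_pb, capacity_gb)
    print(f"{name}: about {dwpd:.0f} drive writes per day")
```

Under that assumed period, the PX02SM rating corresponds to roughly 10 drive writes per day and both PX02SS ratings to roughly 30, which matches the threefold gap between 7.3PB and 21.9PB.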

Finally, we have the Toshiba TC58NC9036GTC controller. As you might have guessed, it is the same controller used in the PX02SM. We don’t have much information about it, other than that it was developed with Marvell.

TESTING METHODOLOGY

In testing the Toshiba PX02SS, along with all enterprise drives, we focus on long term stability. In doing so, we stress products not only to their maximum rates, but also with workloads suited to enterprise environments.

We use many off-the-shelf tests to determine performance, but we also have specialized tests to explore specific behaviors we encounter. With enterprise drives, you will see that we do not focus on many consumer level use-cases.

Our hope is that we present tangible results that provide relevant information to the buying public.

As with all of our 12Gbps SAS reviews, we used the LSI SAS 9300-8e HBA.

LATENCY

To specifically measure latency, we use a series of 512B, 4K, and 8K measurements. At each block size, latency is measured for 100% read, 65% read/35% write, and 100% write mixes.
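The combinations described above form a 3x3 matrix of nine test cases. As a rough illustration only, this Python sketch enumerates them; the dictionary keys and function name are hypothetical and do not correspond to any particular benchmarking tool's settings.

```python
from itertools import product

BLOCK_SIZES_BYTES = [512, 4096, 8192]  # 512B, 4K, 8K
READ_PERCENTAGES = [100, 65, 0]        # the remainder of each mix is writes


def latency_test_matrix():
    """Return every block size / read-write mix combination as a dict."""
    return [
        {"block_size": size, "read_pct": read, "write_pct": 100 - read}
        for size, read in product(BLOCK_SIZES_BYTES, READ_PERCENTAGES)
    ]


for case in latency_test_matrix():
    print(case)
```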

The average latency for the PX02SS is very good. If it weren’t for the HGST SSD800MM, these would be the best results that we have ever seen. While the 100% write latency trailed the HGST drive by a considerable amount, the 100% read and 65% read tests were much closer. The PX02SS even pulled out a win at the 8KB transfer size in those two workloads.

Maximum latency showed similar results. Write latency was nearly double that of the HGST drive. At a 65% read workload, both drives delivered similar results. For 100% reads, the Toshiba PX02SS pulled out a commanding win. By contrast, the SSD800MM had maximum latency between 6.5 and 13ms.

ADVANCED WRITE TESTING

As we talked about in our Micron P400m SSD Review, SSDs have different performance states. Since the Toshiba PX02SS is an enterprise SSD, we will focus on steady state performance.

With the following tests, we stressed the drive using random 4KB write workloads across the entire span for at least 24 hours. This is more than enough to achieve steady state. The following graph shows the latency and IOPS across an 11-hour span with 1-minute averages.

Across 11 hours, the 1-minute averages all fell within a 1,500 IOPS range, which is an excellent result. Unfortunately, it was not quite as good as the SSD800MM, whose 1-minute averages spanned less than 800 IOPS.

Zooming in on the last hour with 1-second averages shows that there is a large difference between the Toshiba and HGST drives. While the HGST had a range of 5K IOPS during steady state, the Toshiba drive’s range was closer to 30K IOPS. The vast majority of data points for the PX02SS were at or above its specification, but there was less consistency than we expected.

Even though the results are better than almost every 6Gbps SATA/SAS SSD that we have tested, they were only marginally better and far from the 65-70K IOPS we got from the SSD800MM.
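The ranges quoted in this section are the gap between the highest and lowest averaged IOPS values at a given averaging interval. As a minimal sketch of that calculation, the Python below averages per-second samples into 1-minute windows and reports the spread at both resolutions. The sample data is synthetic and generated purely for illustration; it is not our measurement data, and the helper names are ours.

```python
import random


def window_averages(samples, window):
    """Average consecutive, non-overlapping windows of `window` samples."""
    full = len(samples) - len(samples) % window  # drop a trailing partial window
    return [sum(samples[i:i + window]) / window for i in range(0, full, window)]


def spread(values):
    """Difference between the highest and lowest value."""
    return max(values) - min(values)


# Synthetic per-second IOPS for one hour (made-up numbers, not test results).
rng = random.Random(0)
per_second = [30_000 + rng.randint(-15_000, 15_000) for _ in range(3600)]
per_minute = window_averages(per_second, 60)

print(f"Spread of 1-second samples: {spread(per_second)} IOPS")
print(f"Spread of 1-minute averages: {spread(per_minute):.0f} IOPS")
```

Longer averaging windows smooth out short dips and spikes, which is why a drive can look very steady at 1-minute resolution and much less consistent at 1-second resolution.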

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

156% op!?!? Man…I’d have been impressed with just 50…lol Glad to see Tosh keeping their game up re: the high end…hope that bleeds over into the consumer market eventually.

Check out your OP calculations!!

1024 GiB of NAND physically installed.

400 GB of user space -> 372.53 GiB

OP = 1 – (372.53 / 1024) = 0.6362

—> 63.62% of the available NAND space is used as OP

It is a 400GB SSD. There is 1024GB (16×64) of memory. Let’s stay away from mixing GiB and GB and leave it as a very simple calculation which has been accepted by the industry for some time now. Thanks ahead!

This is like saying that [imperial] miles are [nautical] miles, even though in different contexts they mean different things. Whenever the chance of mixing them up arises, you have to clarify what you’re talking about, otherwise you only create confusion and room for error when calculations are involved, like in this case.

We appreciate your comments and believe they display the clarification well. Tx!

Responding just to your point of OP always being calculated on the total amount of NAND: that is not true. It is the amount provisioned over the usable space. So it is (1024-400)/400=1.56 or (1024/400)-1=1.56. I do appreciate you keeping me on my toes 🙂

https://renice-tech.com/documents/Definition_of_Overprovisioning.pdf

Sandforce might be calculating it that way, but it doesn’t make much sense logically speaking although the calculation is mathematically correct. On most (won’t say all because you never know) SSDs the OP is increased/added by taking off user capacity from the installed flash memory (which usually is in “even”, divisible amounts), not by adding flash memory to a preset capacity. Thin difference.