MULTI-DRIVE PERFORMANCE, cont.

Finally, we get to aggregate throughput. We can’t saturate the 9207-8i with IOPS, but we can harness the throughput of 8 drives.

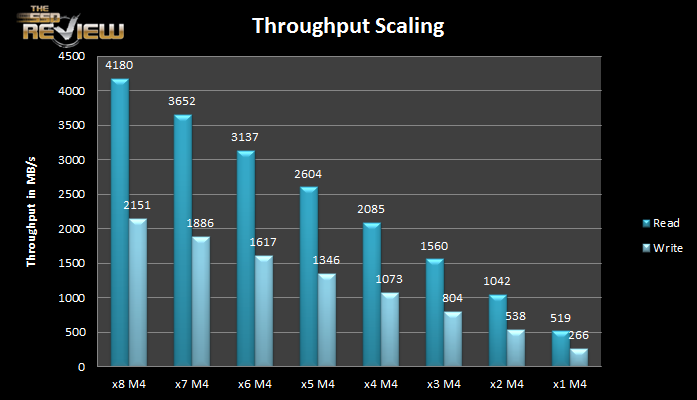

As it turns out, we can make the most of eight Crucial M4 256GB SATA III SSDs. Read and write throughput scales beautifully: 2.15GB/s for sequential writes is exactly 8x the sequential write speed of one M4, while 4.18GB/s is exactly 8x the sequential read speed. Had this been a PCIe 2 device, read scaling would have stopped at five drives or so, since protocol overhead cuts theoretical bandwidth by about 20%. The M4s only write half as fast as they read, so their combined writes come in under the 3GB/s limit of PCIe 2, but they scale well nonetheless.
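
To make the scaling arithmetic concrete, here is a minimal sketch that works backward from the aggregate figures above to a per-drive rate, then checks how many drives would fit under a PCIe 2 ceiling. The 3GB/s ceiling is the figure quoted in this article; the function and constant names are ours, purely for illustration, and the result is a back-of-the-envelope estimate rather than a measurement.

```python
import math

# Aggregate results quoted in the article (GB/s across all eight drives).
DRIVES = 8
AGG_READ_GBS = 4.18
AGG_WRITE_GBS = 2.15

# Usable PCIe 2 bandwidth as quoted in the article (after protocol overhead).
PCIE2_USABLE_GBS = 3.0


def per_drive(aggregate_gbs, drives=DRIVES):
    """Average throughput per drive, assuming perfectly linear scaling."""
    return aggregate_gbs / drives


def drives_before_ceiling(per_drive_gbs, ceiling_gbs):
    """Whole drives that can run at full speed before hitting the ceiling."""
    return math.floor(ceiling_gbs / per_drive_gbs)


read_each = per_drive(AGG_READ_GBS)
write_each = per_drive(AGG_WRITE_GBS)

print(f"Per-drive read:  {read_each:.3f} GB/s")
print(f"Per-drive write: {write_each:.3f} GB/s")
print("Drives at full read speed under PCIe 2:",
      drives_before_ceiling(read_each, PCIE2_USABLE_GBS))
print("All eight drives' writes fit under PCIe 2:",
      AGG_WRITE_GBS <= PCIE2_USABLE_GBS)
```

Under these assumptions, reads run out of PCIe 2 headroom partway through the sixth drive, while eight drives' worth of writes stays below the ceiling.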

The IOmeter shot shows 1MB sequential reads pushing 4.1GB/s through the controller. Excellent stuff.

CONCLUSION

We’re just scratching the surface with these basic tests. But we wanted to know how much data we could push through the card, and we got our answer. With 8 consumer drives we were able to get almost 4200MB/s read throughput. The octet of Crucials managed just shy of 370,000 write IOPS too.

Naturally, when new gear shows up, we can’t resist the urge to try it out. The 9207-8i is going into our Enterprise Test Bench for more testing, but on its way we had to stop and give it a whirl with a few SSDs. The Crucial M4 may itself be supplanted by Micron’s next client drive, but aggressive pricing and considerable speed will make it a mainstay for the foreseeable future. By putting the two products together, we found some excellent performance and perhaps a hint of things to come.

The LSI SAS 9207 HBA is still young, so new developments with respect to drivers and firmware could change performance as it matures. Given LSI’s track record, the 9207-8i has a prosperous future. On the other hand, given that 12Gbps products are going to be on the market sooner rather than later, the 9207 could just be a short-term stepping stone to the PCIe 3 12Gbps HBAs of the not-too-distant future. Predicting the future is never easy though, so it’s best not to make any firm prognostications. We’ll take advancements anywhere we can find them, and LSI’s Gen3 HBAs are certainly great advancements if you have the hardware to make the most of them.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Very impressive results. Nice to see the controller scales so beautifully. Was the M4 your first choice of SSD to test with? Do you think it’s a better choice for this use-case than the Vertex 4 or 830?

Great article. Typo though: “The 9702-8i is going into our Enterprise Test Bench for more testing,”–>”The 9207-8i is going into our Enterprise Test Bench for more testing,”

Thanks for the support and FIXED!

Excellent performance!

I will need to build an SSD RAID 0 array around this HBA to work with my ramdisk ISOs (55Gb). Which drives work best with that kind of data?

Thanks in advance and great review!

Thinking about using this in a project. How did you set up your RAID? The 9207 doesn’t support RAID out of the box. Did you flash the firmware or just set up software RAID?

Test it with i540 240GB!

Christopher, what method did you use to create a RAID-0 array? Was this done using Windows Striping? (I am guessing that based on the fact that you’ve used IT firmware, which causes 8 drives to show up as JBOD in the disk manager)

Originally, the article was supposed to include IR mode results alongside the JBOD numbers, but LSI’s IR firmware wasn’t released until some time after the original date of publication. Software RAID through Windows and Linux was unpredictable too, so we chose to focus on JBOD to show what the new RoC could do on 8 PCIe Gen 3 lanes. In the intervening months, we did experiment with IR mode, but found performance to be quite similar to software-based RAID. It’s something we may return to in a future article, but needless to say, you won’t get the most out of several fast SSDs with a 9207-8i flashed to IR mode.

I had a chance to try SoftRAID0 on IT firmware, RAID0 with IR firmware, and 9286-8i RAID0, all with Intel 520 480GB SSDs. All configurations are speed-limited at 5 drives since I am running PCIe 2.0 x8. Time for a new motherboard, I guess…

Great review! Very nice to see such great info on this subject.

BTW, does this HBA support TRIM?

There’s a clarification that needs to be made in this article.

The theoretical raw bit rate of PCIe 2.0 is around 4GB/s. PCIe is a packet-based store-and-forward protocol, so there’s packet overhead that limits the theoretical data transfer rate.

At stock settings, this overhead is 20%. However, one can increase the size of PCIe packets in BIOS to decrease this overhead significantly (to around 3-5%).

I know this because I’ve raided eight 128GB Samsung 840 Pro with the LSI MegaRAID 9271-8iCC on a PCIe 2.0 motherboard, and I’ve hit this limit on sequential reads. In order to get around it, I raised the PCIe packet size, but doing so increases latency and may cause stuttering issues with some GPUs if raised too high.
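
The relationship between packet size and overhead described above can be sketched roughly as follows. This is a simplified model, not a measurement: it assumes the BIOS setting in question is the PCIe maximum payload size, and it assumes about 24 bytes of fixed overhead per packet (framing, sequence number, a four-doubleword header, and link CRC). It also ignores acknowledgement and flow-control traffic, so real-world overhead will be somewhat higher than these figures.

```python
# Assumed fixed per-packet overhead in bytes:
# framing (2) + sequence number (2) + 4DW header (16) + link CRC (4).
TLP_OVERHEAD_BYTES = 24


def link_efficiency(max_payload_bytes, overhead_bytes=TLP_OVERHEAD_BYTES):
    """Fraction of link bandwidth that carries payload under this model."""
    return max_payload_bytes / (max_payload_bytes + overhead_bytes)


for payload in (128, 256, 512, 1024):
    eff = link_efficiency(payload)
    print(f"{payload:>5} B payload: {eff:.1%} payload, {1 - eff:.1%} overhead")
```

With these assumptions, small payloads lose a mid-teens percentage of bandwidth to packet overhead, while payloads of 512 bytes or more bring that down to the low single digits, which is broadly in line with the figures quoted in the comment.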

Could you provide the settings you used for the RAID stripe, i.e. Strip Size, Read Policy, Write Policy, IO, etc.? I just purchased this card and have been playing with configurations to get an optimum result.

thanks!

Seems like most people want details of how the drives were configured so they can either try to do the tests themselves or just gain from the added transparency.