THE SSD REVIEW ENTERPRISE TEST BENCH

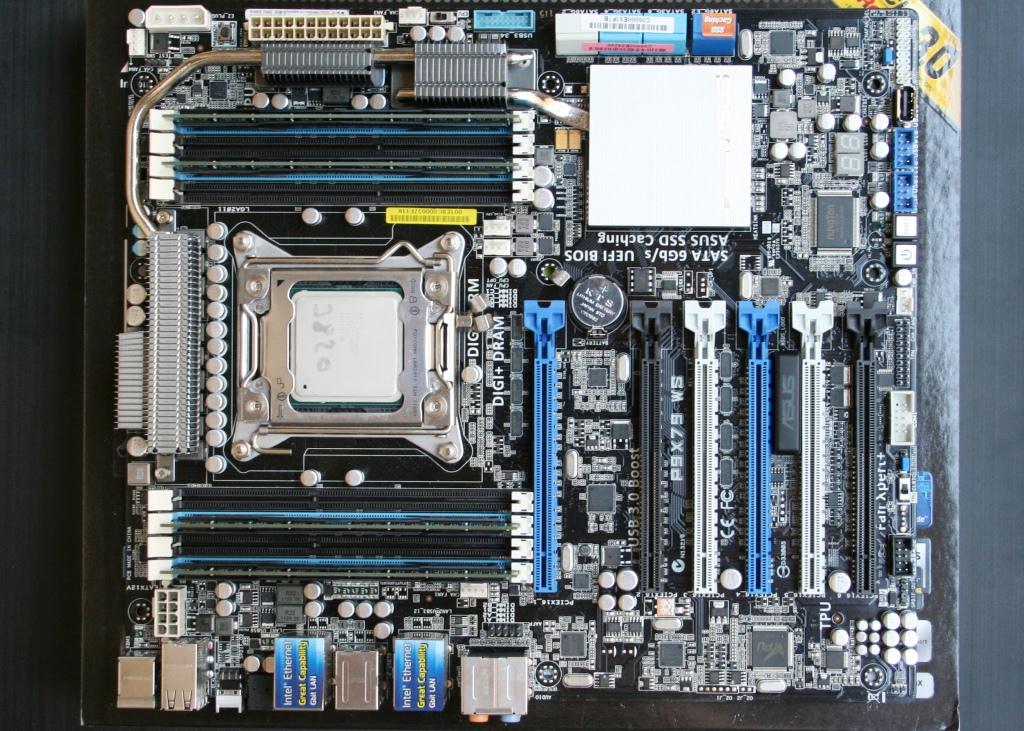

Riding shotgun on this quick performance analysis is the Asus P9X79 WS workstation-class Patsburg motherboard. The Asus P9X79 WS (the WS is the workstation variant) boasts PCIe 3.0 support for its 6 physical PCIe x16 slots. Furthermore, Asus touts “server-grade” RAID card compatibility on the WS, something that will definitely come in handy when dealing with PCIe-based storage products. With a bevy of workstation features and wicked overclocking, the WS should fit the bill nicely.

Each of the PCIe 3.0 x16 slots helps to leverage X79’s 40 lanes of PCIe connectivity. The two white slots are physically x16 but electrically x4. The other four slots (black and blue) operate at x16/x16 with two slots populated, x16/x8/x8 with three, or x8/x8/x8/x8 with all four. If you need some multi-GPU action, this is probably the board you want. While that’s probably not on this particular sample’s agenda, it’s nice to know you could. 40 lanes of PCIe 3.0 bandwidth is definitely a lot.
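As a quick sanity check on those numbers, here is a minimal Python sketch that adds up the lanes for each configuration. It assumes the two x4 slots draw from the same 40-lane pool as the four main slots, which is our simplification for illustration and not something the board's documentation is quoted on here; the names `CPU_LANES`, `WHITE_SLOTS` and `lanes_used` are ours.

```python
# Lane-budget check for the slot configurations described above.
# Assumption (not confirmed by the article): all six slots share the 40 lanes.
CPU_LANES = 40
WHITE_SLOTS = [4, 4]  # physically x16, electrically x4

# Electrical widths of the black and blue slots in each configuration.
configs = {
    "two cards": [16, 16],
    "three cards": [16, 8, 8],
    "four cards": [8, 8, 8, 8],
}


def lanes_used(main_slots, extra_slots=WHITE_SLOTS):
    """Total lanes consumed by the main slots plus the two x4 slots."""
    return sum(main_slots) + sum(extra_slots)


for name, widths in configs.items():
    print(f"{name}: {lanes_used(widths)} of {CPU_LANES} lanes")
```

Under that assumption, every configuration lands within the 40-lane budget.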

The eight DIMM slots surround the 2011 socket. The Core i7-3820 quad core is matched with a quad channel 1600MHz 32GB kit of Crucial Ballistix Sport. All that RAM is handy to have when testing RAM caching or RAM drives, and running multiple virtual machines is difficult with only 16GB.

Where Sandy Bridge really increased dual channel memory bandwidth over its predecessors, Sandy Bridge-E takes the ball and runs with it. Four channels of DDR3 can more than double SB’s dual channel numbers, and when memory bandwidth is important, that’s going to make a huge difference.
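To put rough numbers on that, theoretical peak bandwidth is simply channels times transfer rate times 8 bytes per 64-bit channel. The sketch below compares quad channel DDR3-1600 (the kit in this bench) against dual channel DDR3-1333, which we are assuming as the Sandy Bridge baseline; the function name is ours, and real-world throughput will come in below these peaks.

```python
def peak_bandwidth_gbs(channels, transfers_mt_s, bus_bytes=8):
    """Theoretical peak in GB/s: channels x MT/s x bytes per transfer."""
    return channels * transfers_mt_s * bus_bytes / 1000


# Assumed baseline: dual channel DDR3-1333 on Sandy Bridge.
dual_1333 = peak_bandwidth_gbs(2, 1333)
# This bench: quad channel DDR3-1600 on Sandy Bridge-E.
quad_1600 = peak_bandwidth_gbs(4, 1600)

print(f"dual channel DDR3-1333: {dual_1333:.1f} GB/s")
print(f"quad channel DDR3-1600: {quad_1600:.1f} GB/s")
print(f"ratio: {quad_1600 / dual_1333:.2f}x")
```

On paper that is roughly 21 GB/s against 51 GB/s, so the "more than double" claim holds for these theoretical figures.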

System memory consists of four modules of Crucial Ballistix Sport DDR3-1600 memory for a total of 32GB. X79 also needs a discrete GPU, and to that end we’re using an Asus nVidia GTX 560 Ti Direct CU II. It’s quiet at idle and cool under load, two properties we highly prize.

We would like to thank LSI, ASUS, Intel, and Crucial for sponsoring our Enterprise Test Bench, as we are certain it will be put to good use in coming days.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Very impressive results. Nice to see the controller scales so beautifully. Was the M4 your first choice of SSD to test with? Do you think it’s a better choice for this use-case than the Vertex 4 or 830?

Great article. Typo though: “The 9702-8i is going into our Enterprise Test Bench for more testing,”–>”The 9207-8i is going into our Enterprise Test Bench for more testing,”

Thanks for the support and FIXED!

Excellent performance!

I will need to build an SSD RAID 0 array around this HBA to work with my ramdisk ISOs (55Gb). What are the better drives to work with that kind of data?

Thanks in advance and great review!

Thinking about using this in a project. How did you set up your RAID? The 9207 doesn’t support RAID out of the box. Did you flash the firmware or just set up software RAID?

Test it with i540 240GB!

Christopher, what method did you use to create a RAID-0 array? Was this done using Windows Striping? (I am guessing that based on the fact that you’ve used IT firmware, which causes 8 drives to show up as JBOD in the disk manager)

Originally, the article was supposed to include IR mode results alongside the JBOD numbers, but LSI’s IR firmware wasn’t released until some time after the original date of publication. Software RAID through Windows and Linux was unpredictable too, so we chose to focus on JBOD to show what the new RoC could do on 8 PCIe Gen 3 lanes. In the intervening months, we did experiment with IR mode, but found performance to be quite similar to software-based RAID. It’s something we may return to in a future article, but suffice it to say, you won’t get the most out of several fast SSDs with a 9207-8i flashed to IR mode.

I had a chance to try SoftRAID0 on IT firmware, RAID0 with IR firmware, and 9286-8i RAID0, all with Intel 520 480GB SSDs. All configurations are speed-limited at 5 drives since I am running PCIe 2.0 x8. Time for a new motherboard, I guess…

Great review! Very nice to see such great info on this subject.

BTW, does this HBA support TRIM?

There’s a clarification that needs to be made in this article.

The theoretical bandwidth of a PCIe 2.0 x8 link is around 4GB/s. PCIe is a packet-based store-and-forward protocol, so there’s packet overhead that limits the theoretical data transfer rate.

At stock settings, this overhead is 20%. However, one can increase the size of PCIe packets in BIOS to decrease this overhead significantly (to around 3-5%).

I know this because I’ve RAIDed eight 128GB Samsung 840 Pro drives with the LSI MegaRAID 9271-8iCC on a PCIe 2.0 motherboard, and I’ve hit this limit on sequential reads. In order to get around it, I raised the PCIe packet size, but doing so increases latency and may cause stuttering issues with some GPUs if raised too high.
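For anyone who wants to play with the numbers in this comment, here is a rough Python sketch of how link width, line encoding and packet size combine. PCIe 2.0 runs at 5 GT/s per lane with 8b/10b encoding, which is where the 4GB/s figure for an x8 link comes from, and PCIe 3.0 runs at 8 GT/s with 128b/130b. The per-packet overhead of 24 bytes is an assumed round figure for header, sequence number, CRC and framing. The function names are ours, and the model ignores flow-control and acknowledgement traffic, so treat the output as an upper bound.

```python
# (gigatransfers/s per lane, line-encoding efficiency) per PCIe generation
PCIE_GEN = {
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
}

# Assumption: about 24 bytes of header, sequence number, CRC and framing
# per packet. The exact figure depends on header type and options.
TLP_OVERHEAD_BYTES = 24


def link_bandwidth_gbs(gen, lanes):
    """Link bandwidth in GB/s after line encoding, before packet overhead."""
    rate, encoding_eff = PCIE_GEN[gen]
    return rate * encoding_eff * lanes / 8  # bits to bytes


def payload_efficiency(max_payload, overhead=TLP_OVERHEAD_BYTES):
    """Fraction of each packet that is payload at a given max payload size."""
    return max_payload / (max_payload + overhead)


def effective_gbs(gen, lanes, max_payload):
    """Rough upper bound on data throughput in GB/s."""
    return link_bandwidth_gbs(gen, lanes) * payload_efficiency(max_payload)


for payload in (128, 256, 512):
    print(f"PCIe 2.0 x8, {payload}-byte payload: "
          f"{effective_gbs(2, 8, payload):.2f} GB/s")
print(f"PCIe 3.0 x8, 256-byte payload: {effective_gbs(3, 8, 256):.2f} GB/s")
```

Under these assumptions, raising the payload size from 128 to 512 bytes cuts the per-packet overhead from roughly 16% to under 5%, which is in line with the improvement described above.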

Could you provide the settings you used for the RAID stripe, i.e. Strip Size, Read Policy, Write Policy, IO, etc.? I just purchased this card and have been playing with configurations to get an optimum result.

thanks!

Seems like most people want details of how the drives were configured so they can either try to do the tests themselves or just gain from the added transparency.