TESTING METHODOLOGY

Our complete testing methodology is described in our Solid State Storage Enterprise Test Protocol.

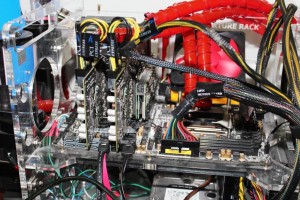

We utilize the X79 Patsburg chipset in tandem with the Windows Server 2008 R2 operating system.

Software such as Iometer allows us to test this drive with a minimum of host processing limitations.

We are focused on providing accurate measurements of the SSS itself, free from performance limits introduced by other software and hardware. Different software-based approaches can be held back by many aspects of the host system's CPU, RAM, and chipset.

SNIA STANDARDS

We utilize several key considerations of the industry-accepted SNIA Specification for our testing. Performance testing of enterprise SSDs has to be conducted at steady state. This can be accomplished only by placing the drive under a very heavy workload over an extended period of time. Simply writing the capacity of the SSD several times will not suffice, as there are many factors that must be taken into consideration when attaining true ‘Steady State’.

‘Steady State’ can vary based upon the workload and the type of data access placed upon the SSD. There is a profound difference between sequential and random data loads, and also between read/write mixes of various percentages. The first requirement is that three steps are taken to assure Steady State under very specific circumstances: Workload Independent Preconditioning (WIP), Workload Based Preconditioning (WBP), and steady state convergence verification. All measurements are taken after WIP and WBP.

After each round of preconditioning, performance data must be logged to confirm that Steady State has been achieved (slope is less than 10% min/max). As our final round of testing, we apply 20% over provisioning to the device and test performance again. Over provisioning (OP) is the practice of leaving a large amount of unformatted spare area on the device to increase performance and endurance under very demanding workloads. The test protocol referenced above contains much more detail on our testing procedures.
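The convergence check described above can be sketched in a few lines of Python. This is a minimal illustration, not our actual test harness: the function name `is_steady_state` and the sample IOPS figures are hypothetical, the 10% slope limit comes from the text, and the additional 20% data-excursion limit is an assumption based on our reading of the SNIA specification.

```python
from statistics import mean

def is_steady_state(window, max_range_frac=0.20, max_slope_frac=0.10):
    """Return True if the per-round measurements in `window` look converged.

    window          -- one value per round (e.g. IOPS), oldest first
    max_range_frac  -- assumed limit: (max - min) relative to the average
    max_slope_frac  -- limit from the text: excursion of the best-fit
                       line across the window, relative to the average
    """
    n = len(window)
    if n < 2:
        raise ValueError("need at least two rounds to judge convergence")

    avg = mean(window)
    x_mean = (n - 1) / 2  # rounds are numbered 0 .. n-1

    # Ordinary least-squares slope of measurement versus round number.
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - avg) for x, y in enumerate(window))
    slope = sxy / sxx

    data_excursion = max(window) - min(window)
    slope_excursion = abs(slope) * (n - 1)  # rise/fall of the fit over the window

    return (data_excursion <= max_range_frac * avg
            and slope_excursion <= max_slope_frac * avg)

# Hypothetical five-round windows, for illustration only.
settled = [1000, 1010, 995, 1005, 1000]
still_dropping = [2000, 1700, 1500, 1350, 1250]
print(is_steady_state(settled))         # True
print(is_steady_state(still_dropping))  # False
```

A drive that is still falling toward its settled performance fails the check even after many capacity fills, which is why logging each round matters more than counting how much data has been written.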

THERMAL CONSIDERATIONS

Intel specifies a 0 to 55 °C operating temperature range for this SSD. During testing we were very careful to stay within the temperature guidelines, and this was aided by testing on an open air rack, albeit with forced airflow directly over the SSD. A server housing these SSDs will already have the large, loud cooling fans that are required.

The temperature monitoring can be handled through the Intel Data Center Tool, which is a command-line based system for management of the SSD. This tool also allows for monitoring of SMART data, which includes lifetime write monitoring of the device. This feature is important to determine the remaining lifetime for the device, a crucial requirement for enterprise applications.

Interestingly enough, we also used AIDA64 to monitor the temperature data from the Intel 910. This illustrates that the driver is flexible enough to work with many commonly used tools.

We are able to test the performance of the SSD at both 400 and 800GB capacity with the single 800GB SSD that we were given by following configuration instructions provided by Intel. Unfortunately this does limit our testing of thermal performance somewhat, as there are four processors on this 800GB model. The 400GB model will only have two processors, so there will be a lower thermal envelope involved. Even when emulating the 400GB device by only using half of the 800GB capacity, the other NAND packages and the Intel processors are still there, generating heat, if only at idle. This makes accurate 400GB thermal monitoring impossible.

In the absence of 400GB thermal testing we will conduct two sets of tests, both on the 800GB SSD. One is for the Default power setting, which draws 25 Watts on average. The other is for the Maximum Performance Mode, which draws an average of 28 Watts and peaks around 38 Watts. Testing was performed with 200 LFM (Linear Feet per Minute of airflow, +/- 10%) for both settings. A word of caution: the Maximum Performance Mode requires 300 LFM for normal operation. The server environments that these SSDs are deployed into usually have high ambient temperatures, especially now that data centers are using outside air for cooling to keep costs down. The low ambient temperature in our lab allowed us to test the performance mode at the same lower 200 LFM, so that we can directly compare the amount of heat produced by the two settings. Workload testing was conducted at a QD of 128.

The results are recorded as a temperature delta to ambient, which accounts for any variation in room temperature. There is remarkably little variation in the temperature produced by the Intel 910. As the results show, the maximum swing is a few points at most across the different workloads, including between the maximum and default settings. With an overall difference from default of only 3 Watts on average, it is a safe bet that the Maximum Performance Mode does not place significantly more stress upon the server environment. For workloads that benefit, and provided the host system's motherboard supports the extra wattage, the Maximum Performance Mode is a great feature.
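For readers who want to log their own drives the same way, the delta-to-ambient calculation can be sketched as below. The function names and the sample readings are hypothetical and are not our measured results; the sketch only assumes that each sample pairs a device temperature with the room temperature taken at the same moment.

```python
def temperature_deltas(samples):
    """Convert (device_c, ambient_c) pairs into deltas above ambient."""
    return [device_c - ambient_c for device_c, ambient_c in samples]

def swing(deltas):
    """Largest difference between any two deltas in a run."""
    return max(deltas) - min(deltas)

# Hypothetical readings in degrees Celsius, for illustration only.
default_mode = [(52.0, 22.0), (54.5, 23.5), (53.0, 22.5)]
deltas = temperature_deltas(default_mode)
print(deltas)         # [30.0, 31.0, 30.5]
print(swing(deltas))  # 1.0
```

Because each reading is normalized against the room temperature at the time, runs taken on different days or at different times of day remain comparable.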

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Simply Outstanding Review Paul!

Thanks, this one was my pleasure for sure. The 910 is one of the best SSDs that I have been lucky enough to test. Really she's a beast, just an awesome performer 🙂

When is this hitting the market? I can’t find it anywhere…

This looks like a dream product for VMware folks. Would it be possible to have PCI SSD reviews indicate whether the vendor supports multi-slot usage of the product?

This is going to ROCK with Nexenta or any other ZFS product. 2 of those with 4 mirrored drives across those 2 cards… can't wait to test it behind SVC as tier0

I just purchased the 800 GB 910 recently. I wish I would have read this article first because Intel’s documentation is abysmal on two important points (1) you cannot boot from the device, and (2) it appears as 4 devices to the system.

My application is a workstation and I would have liked to be able to boot the O/S from a single 800 GB drive in order to keep things simple. I would have preferred they implemented a hardware RAID controller.

Currently I have it configured in RAID 0 from Windows 7 and the first thing I did was put the paging file there (192 GB) which helps the overall system performance. Also, I have my RAM Disk backing store there so rebooting or shutting down the system is much faster now.

I am interested in tiered storage solutions – can anyone provide a reference for something that might work?

Hey guys, what about security? Is there any way to have two of those cards mirrored? In case this card is used to store data files for e.g. an OLTP database it might dramatically increase database performance, but it NEEDS to be 100% bulletproof and data secure! Any ideas?

Yep, since it appears as 2 logical drives to the OS, you can do RAID 1 with the 400 GB (2 logical) and even RAID 5/6 with the 800 GB version (4 logical drives).

The incredible world