IOMETER 1.1.0-rRC1

We used IOMeter for our last test to cover all four corners (sequential and random reads and writes) one last time. Testing was set up with 8 workers, a 20GB file size, a queue depth of 64 (QD64) and normal cycling to run all selected targets for all workers.

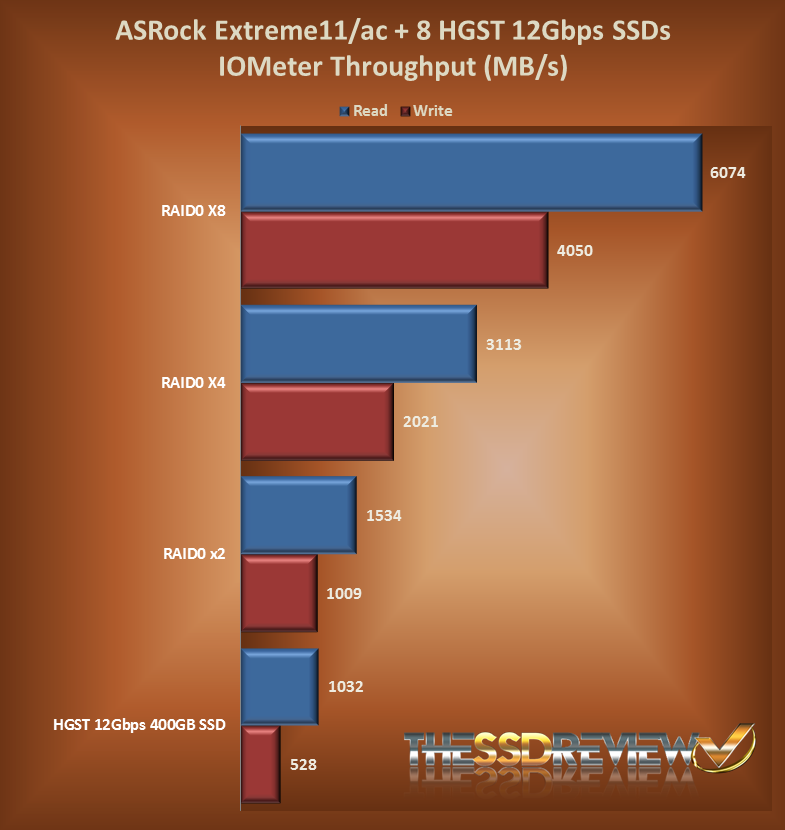

THROUGHPUT

All levels of testing were great, but it was especially nice to finally see read speeds of 6GB/s and write speeds of 4GB/s in RAID0 x8.
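As a rough sanity check, the aggregate RAID0 x8 figures can be divided by the number of drives to estimate what each SSD contributes. This is a minimal sketch that assumes perfectly even striping and ignores controller and expander overhead. Real arrays rarely split the load this cleanly.

```python
# Aggregate RAID0 x8 figures quoted above (decimal GB/s).
AGGREGATE_READ_GBPS = 6.0
AGGREGATE_WRITE_GBPS = 4.0
DRIVES = 8


def per_drive_share(aggregate_gbps: float, drives: int) -> float:
    """Aggregate throughput split evenly across drives (assumes ideal RAID0 striping)."""
    return aggregate_gbps / drives


read_share = per_drive_share(AGGREGATE_READ_GBPS, DRIVES)
write_share = per_drive_share(AGGREGATE_WRITE_GBPS, DRIVES)
print(f"read per drive:  {read_share * 1000:.0f} MB/s")   # 750 MB/s
print(f"write per drive: {write_share * 1000:.0f} MB/s")  # 500 MB/s
```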

IOPS

Achieving 850,677 IOPS in RAID0 x8 was icing on the cake; however, it left us envious of that 1,000,000 IOPS plateau, which remained just out of reach.
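Little's law (I/Os in flight = IOPS × mean latency) gives a rough idea of the average latency behind that IOPS figure. The sketch below assumes the QD64 setting applies per worker, for 8 × 64 = 512 I/Os in flight. That is our assumption about the IOMeter configuration, not a measured value, so the result is an estimate rather than a reported latency.

```python
WORKERS = 8
QUEUE_DEPTH_PER_WORKER = 64   # assumption: QD64 applies to each worker
MEASURED_IOPS = 850_677       # RAID0 x8 result quoted above


def mean_latency_ms(outstanding_ios: int, iops: float) -> float:
    """Little's law rearranged: mean latency = I/Os in flight / IOPS."""
    return outstanding_ios / iops * 1000.0  # seconds -> milliseconds


outstanding = WORKERS * QUEUE_DEPTH_PER_WORKER
latency = mean_latency_ms(outstanding, MEASURED_IOPS)
print(f"{outstanding} I/Os in flight -> ~{latency:.2f} ms mean latency")
```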

REPORT SUMMARY AND FINAL THOUGHTS

We don’t think that anyone really conceived of pushing 6GB/s transfer speeds and 850K IOPS through a consumer motherboard, but there you have it. The ASRock Extreme 11/ac is easily the most feature-rich consumer retail motherboard on the market when you consider that it has 6 Intel ports, 2 mSATA slots, 2 Thunderbolt x2 ports and 16 SAS/SATA ports driven by the LSI SAS 3008 controller with the LSI SAS 3x24R expander, along with a host of other features.

These include 4-way SLI/CrossFire support through the four PCIe 3.0 x16 slots, 802.11ac Wi-Fi at up to 867Mbps, dual Intel Gigabit LAN, 4K support, the ability to drive three monitors without a discrete graphics card, and a Wi-SD box that adds 4 more USB 3.0 ports and an SD card reader; the list seems to go on and on. Perhaps ASRock was looking for a larger audience than we expected. Then again, perhaps they were simply trying to make a point, one made very clear by 6GB/s transfer speeds and 850K IOPS on this motherboard.

There was also much to learn from testing different RAID capacities with our HGST Ultrastar 12Gbps SAS enterprise SSDs. Most importantly, we think it is paramount to use a variety of benchmark configurations rather than relying on any single benchmark implementation. In the past, we had often seen compatibility issues with one benchmark or another, but never had it become as obvious as during this analysis. The typical benchmarks that we use in our day-to-day testing topped out at 4GB/s transfer speeds and 477K IOPS; they couldn’t stretch any further. Only through true-to-life testing did we see firsthand what this setup could do.
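To put that ceiling in perspective, this sketch compares the IOMeter peaks with the limits of our day-to-day benchmarks. It treats the rounded figures as exact (477K is taken as 477,000), so the ratios are approximate.

```python
# Peak figures quoted in this review (decimal GB/s; 477K rounded to 477,000).
SYNTHETIC_PEAK = {"GB/s": 4.0, "IOPS": 477_000}
IOMETER_PEAK = {"GB/s": 6.0, "IOPS": 850_677}


def headroom(measured: dict, ceiling: dict) -> dict:
    """Ratio of each measured metric to the corresponding benchmark ceiling."""
    return {metric: measured[metric] / ceiling[metric] for metric in ceiling}


ratios = headroom(IOMETER_PEAK, SYNTHETIC_PEAK)
for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x the synthetic ceiling")
```

By this rough measure, the everyday benchmarks left about a third of the throughput and over 40% of the IOPS on the table.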

We threw 25.5GB of mixed movie files at the RAID0 x8 configuration, and it moved them from one place to another in 22 seconds, or just over 1GB per second on average. Even more encouraging, regardless of whether it was the single Ultrastar or RAID0 x2/4/8, the longest it took to move that folder was 1 minute and 16 seconds. We could only dream of this kind of performance in everyday use.
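The average copy rate is simply folder size divided by elapsed time. A minimal sketch using the figures above, in decimal gigabytes:

```python
FOLDER_GB = 25.5  # size of the mixed movie folder


def average_rate_gbps(size_gb: float, seconds: float) -> float:
    """Average transfer rate over the whole copy."""
    return size_gb / seconds


fastest = average_rate_gbps(FOLDER_GB, 22)       # RAID0 x8
slowest = average_rate_gbps(FOLDER_GB, 60 + 16)  # longest run, 1 min 16 s
print(f"RAID0 x8:    {fastest:.2f} GB/s")  # 1.16 GB/s
print(f"longest run: {slowest:.2f} GB/s")  # 0.34 GB/s
```

Even the longest run works out to roughly a third of a gigabyte per second.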

Last but not least, if you think we plan to stop here, think again. We have 16 SATA 3 SSDs on their way for testing on this board, hopefully in various RAID configurations but most definitely in RAID0 x16. For now, though, our sincere thanks to both ASRock and HGST for making this report possible.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Hi,

We threw 25.5GB of mixed movie files at the RAID0x8 configuration and it moved them from one place to another in 22 seconds, less than a GB per second.

Do you mean more than a GB per second?

Whatever it is some speed. What does one of these beasts cost?

We have seen them around the 500-600 mark, and thanks…will fix.

How come you didn’t run Vantage?

We did run Vantage at all levels, but there was no appreciable difference from our first result of 61124. The scores were 67724 (x2), 68851 (x4) and 68820 (x8). We felt including them served no purpose, as Vantage favors fresh SSDs, whereas these were far from new, and they are also enterprise SSDs. Our main purpose in testing was to see how high an output we could reach from the motherboard.
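The step-by-step change between those Vantage scores can be checked with a few lines of Python. The labels are ours; "first" refers to the 61124 result mentioned above.

```python
# Vantage scores quoted above, in the order they were reported.
scores = [("first", 61124), ("x2", 67724), ("x4", 68851), ("x8", 68820)]


def pct_change(old: int, new: int) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Change between each consecutive pair of results.
changes = [
    (f"{prev_label} -> {label}", pct_change(prev, score))
    for (prev_label, prev), (label, score) in zip(scores, scores[1:])
]

for step, change in changes:
    print(f"{step}: {change:+.1f}%")
```

With these figures, the x2, x4 and x8 scores sit within about 2% of one another.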

Can’t really find an answer: is it possible to make a 16-SSD RAID 0 using these 16 ports? Is this what you are going to test next? I am asking because the ASRock website mentions that up to 10 drives can be used in RAID.

Yes… It’s an effective answer for much of what we do, but the truth is that we wanted to see how high we could go with as many SSDs as possible to go along with an amazing build.

Can not wait for the 16x SSD review!

Currently using the LSI SAS 9300-8i with 8x SSDs.

Were you able to get the 12 SSDs connected and tested? Is that still forthcoming, or did I miss it somewhere?

No. The company identified supply issues that we are still trying to overcome.

If you use all the ports on the LSI controller and run 4-way GPUs, what will the PCIe configuration be?

Is there any way to flash the LSI controller to allow RAID 6?