MEASURING PERFORMANCE

Normally we give a boilerplate explanation of how we secure erase the drive and make sure it is in steady-state before we begin testing. Since the ALLONE drive is always in steady state, we just moved right into our performance testing.

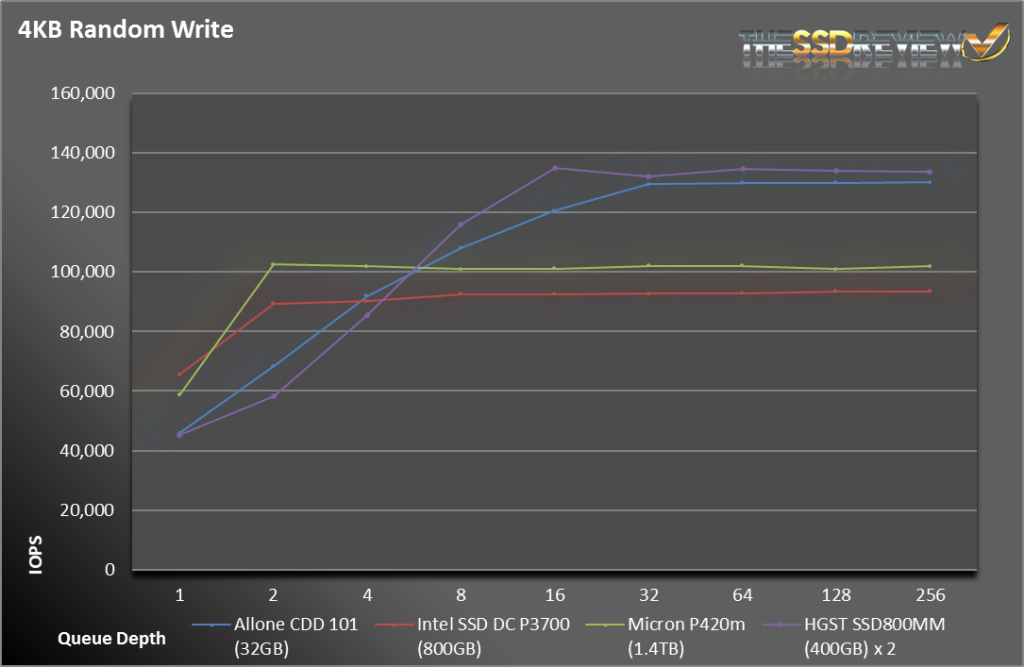

For random 4KB write operations, the ALLONE did a better job at staying with the pack. Once the queue depths were sufficiently high, it easily outpaced the Intel and Micron offerings.

Random 4KB reads were almost the complete opposite. At low queue depths, it was free and clear of the other drives, but stalled as queue depths rose. Once again, while the competition was putting up 300-750K IOPS, the ALLONE was stuck at 130K.

As we mentioned earlier, sequential performance is not the strong suit of the Cloud Disk Drive 101. While it was able to hang close with the Micron P420m for sequential writes, it was no match for the competition in the remaining tests. In fact, it was only 10-20% faster than most enterprise SATA SSDs. We are so used to PCIe-based storage products hitting 1, 2, or even 3GB/s in our sequential tests that we were a little disappointed. Fortunately for ALLONE, sequential operations are not the intended workload for the Cloud Disk Drive 101.

At this point, we aren’t quite sure what to think about this drive. While it has some unique characteristics, it loses certain tests by a very wide margin.

SNIA IOPS TESTING

The Storage Networking Industry Association has an industry-accepted performance test specification for solid state storage devices. Some of the tests are complicated to perform, but they allow us to look at some important performance metrics in a standard, objective way.

SNIA’s Performance Test Specification (PTS) includes IOPS testing, but it is much more comprehensive than just running 4KB writes with IOMeter. SNIA testing is more like a marathon than a sprint. In total, there are 25 rounds of tests, each lasting 56 minutes. Each round consists of 8 different block sizes (512 bytes through 1MB) and 7 different access patterns (100% reads to 100% writes). After 25 rounds are finished (just a bit longer than 23 hours), we record the average performance of 4 rounds after we enter steady state.

- Preconditioning: 3x capacity fill with 128K sequential writes

- Each round is composed of 0.5K, 4K, 8K, 16K, 32K, 64K, 128K, and 1MB accesses

- Each access size is run at 100%, 95%, 65%, 50%, 35%, 5%, and 0% Read/Write Mixes, each for one minute.

- The test is composed of 25 rounds (one round takes 56 minutes, 25 rounds = 1,400 minutes)
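The schedule above can be sketched in a few lines of Python to check the arithmetic. This is only an illustration of the numbers listed here, not the SNIA tooling itself; the constant and function names are our own for this example.

```python
# Block sizes and read percentages as listed in the bullets above.
BLOCK_SIZES = ["0.5K", "4K", "8K", "16K", "32K", "64K", "128K", "1MB"]
READ_PERCENTAGES = [100, 95, 65, 50, 35, 5, 0]
MINUTES_PER_STEP = 1   # each size/mix combination runs for one minute
ROUNDS = 25


def build_round():
    """Return every (block size, read %) combination in one round."""
    return [(size, read_pct)
            for size in BLOCK_SIZES
            for read_pct in READ_PERCENTAGES]


def round_minutes():
    """Length of one round in minutes."""
    return len(build_round()) * MINUTES_PER_STEP


def total_minutes():
    """Length of the full run in minutes."""
    return round_minutes() * ROUNDS


if __name__ == "__main__":
    print(f"steps per round: {len(build_round())}")
    print(f"one round: {round_minutes()} minutes")
    print(f"full run: {total_minutes()} minutes "
          f"({total_minutes() / 60:.1f} hours)")
```

Eight block sizes times seven mixes gives the 56 one-minute steps per round, and 25 rounds gives the 1,400 minutes quoted above.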

Once again, we have to remind ourselves that we are not dealing with NAND storage. There are two things that stand out that make these graphs unique. The first is that, other than at 512B, there is almost no difference between read, write and mixed workloads. Take another look at the bar chart: the bars are almost completely identical.

The second thing that jumped out as we reviewed our results is that the performance is the same, regardless of previous operations. We normally run our SNIA tests because they help show how an SSD handles quick transitions between different workloads. Because of this, you sometimes see results that are lower than if you ran that individual test for long periods of time. With the ALLONE, much like rotating HDDs, the performance you get is completely independent of any previous operations. This is a trait that most SSDs would kill for.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |


4K Random Reads @ QD1 reached 60KIOPS or am i starting to grow old ‘n’ losing my vision ?

How much for this?

ummm $15000

Oh wow, I thought it was only $6k. $15k for consumer grade ram, with consumer grade SD cards with gen 1 pci-e. Pass.

An overpriced solution in search of a problem. At 32GB about the only thing I could see it being used for is a high speed scratch area for database queries. Fortunately, I could just use a regular RAMdisk for that at almost no cost.

I expect to see these featured at overstock.com in the near future………

Read the results and realize where the limit is.

Most SSD are IOps limited, this thing is bandwidth limited. At QD32, it does not matter if you are doing 512B, 4k or 128k, multiply the Iops by the payload and you get the same limit (~600MB/s). They are limited by their PCIe gen1 x4.

Their RAM should be good for 32bits*4*1600Mbps=25.6GB/s.

If they can increase their RAM support (to 16GB or more SODIMM), have equivalent MicroSD (bunch of 16GB) and raise their bus to gen3 (4GB/s) or even better widen it to x8 or x16, then they may have an interesting product.

Until then, it will be *much* cheaper (and with better perfs) to dedicate some RAM for the same task…
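The arithmetic in the comment above can be checked with a short Python sketch. It is only an illustration. The 130K IOPS figure at 4KB is taken from the review's random read results. The 250MB/s per lane figure is the usual PCIe gen1 rate after 8b/10b encoding. The function names are made up for this example, and `multiplier` simply stands for the "*4" factor in the comment, whatever it refers to on the actual board.

```python
def throughput_mb_s(iops, block_bytes):
    """Bandwidth implied by an IOPS figure at a given block size (decimal MB/s)."""
    return iops * block_bytes / 1_000_000


def pcie_gen1_mb_s(lanes):
    """Theoretical PCIe gen1 ceiling per direction, before protocol overhead.

    Assumes 2.5 GT/s per lane with 8b/10b encoding, i.e. 250 MB/s per lane.
    """
    return 250 * lanes


def dram_peak_mb_s(bus_bits, multiplier, mbps_per_pin):
    """Peak DRAM bandwidth following the comment's bits * 4 * Mbps formula."""
    return bus_bits * multiplier * mbps_per_pin / 8


if __name__ == "__main__":
    # 130K IOPS at 4KB, the plateau reported for random reads in the review.
    print(f"4KB @ 130K IOPS: {throughput_mb_s(130_000, 4096):.0f} MB/s")
    print(f"PCIe gen1 x4 ceiling: {pcie_gen1_mb_s(4)} MB/s")
    print(f"DRAM peak: {dram_peak_mb_s(32, 4, 1600) / 1000:.1f} GB/s")
```

The point of the comparison is the gap between what the DRAM could deliver and what the bus lets through.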

I have no idea what this product is supposed to be used for. It’s pci-e 1x, no clue why, limits the bandwidth as you said. I’d like to see this with gen3 pciE and either a x8 or even x16 slot. If you need some low latency transactional ram, you purchase more ram. 16GB sticks are around 170 bucks for 1866mhz DDR3 ECC RAM, and around the 220 range for DDR4 2133. I can purchase a hell of a lot of ram before I’d use something like this, and even have so much money left over that I could put battery backups on top of my battery backups ensuring that the server doesn’t go down.

Additionally, this product seems about as enterprise grade as using a Samsung 840 Evo for your high-write database server. The fact that it uses 1600mhz commodity RAM with no ECC, commodity grade SD cards (That confuses me the most) and is priced at $6k mean that nobody will use this ever unless these are addressed.

Please stop making statements like “this is not an SSD”. It is! SSDs cover ALL solid state technologies for storage. While SSDs have become synonymous with flash storage in recent years, the two terms are not interchangeable. Enterprise storage companies rarely use the term SSD because it is non-descriptive of the underlying technology. They prefer to use terms like Enterprise Flash Drive (EFD).

With the performance of this, you’re better off getting 2011 platform (which supports 64GB of ram) ram disk software and a decent UPS. Its gonna end up much cheaper and faster.

with the storage you can install windows on it, while a ramdrive can’t do that ..;)

Still too much, if it was like 150-200 euros I might consider it

LOL 15K with that money I’d get ultradimm, SSD on ram slots.

So enterprise is willing to pay 15k USD for this, but apparently not the significantly less it’d cost to get 1TB of RAM in a machine and use a chunk of it as a RAM drive… Yeah right.

They have approximately the right market, but they have over priced an under-performing product. I agree with others that it needs at least PCIe 2.0 x8 and ECC DRAM to be worth half the asking price. But, I found this review because I do have need of a small, fast, infinite write, non-volatile storage for my mail server message queues. So, keep at it.

What a fucking ripoff!!!

What I want is a cheap unpopulated PCI card that will take all my old 256M, 512M, and 1GB DDR ram sticks and let me use it as a ramdisk for my swap file and temp folders. It’s not worth the effort to sell them and there are only so many keychains I need.