THE SNIA SPECIFICATION

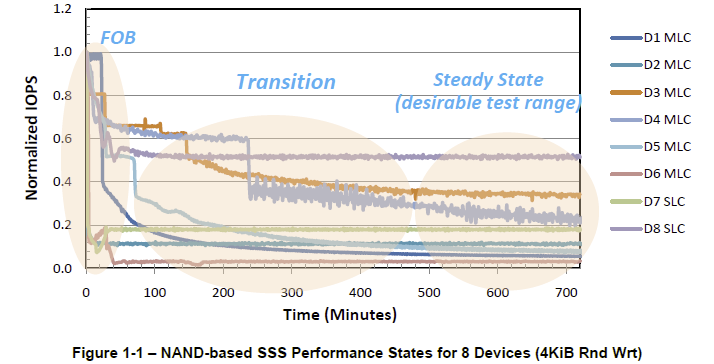

Unfortunately, the performance of solid state storage (SSS) is so variable that the marketed performance numbers are usually attainable only when the device is in a fresh, never-used state. This can lead to misleading marketing, because what you see is definitely NOT what you get after extended use. The amount of misleading marketing in the industry, particularly for client (i.e., consumer) SSDs, can be a bit alarming.

Fortunately, the enterprise sector does not suffer from this plague of misleading information as much as the client side does. This is largely because manufacturers in the enterprise sector are dealing with much more sophisticated buyers.

Enter SNIA, the Storage Networking Industry Association, which has collaborated with many industry-leading companies to compile a specification for testing SSS under Steady State conditions. The goal of this industry-accepted norm is that all SSS be measured with the same yardstick and under the same conditions, standardizing performance measurement and dispelling the majority of misleading performance claims.

SNIA has compiled separate Client and Enterprise Performance Test Specifications.

After initially taking a single (Enterprise) approach, SNIA has now separated the tests into Client and Enterprise suites, and there is wisdom in this. The workload of a typical SSS that the average Joe uses to browse the internet and that of an SSS being tortured in a server buried deep inside a massive data center the size of 11 football fields are going to be worlds apart.

SNIA CLIENT (CONSUMER) TESTING

Unfortunately, this has led to a few issues. As with any specification, not everyone is going to agree to the conditions, and there is no way to force compliance for this type of testing. SNIA results for a consumer SSD are going to be much lower than the advertised specifications seen on SSD packaging today, which creates quite the quandary for manufacturers.

After all, as consumers, are we going to choose an SSD capable of 500MB/s transfer speeds or one capable of 100MB/s (as determined by the SNIA spec) at the same price?

In the end, the manufacturer that posts Fresh Out of Box (FOB) results, which can be somewhat misleading, is going to outsell the manufacturer that posts realistic, extended-use SNIA results. Every manufacturer has the same goal: to move units. Without a means of enforcing compliance with the SNIA specification, it is doubtful that it will ever be widely used for consumer products. At best, SNIA results might be listed alongside the FOB results we are so used to seeing, albeit beneath them.

Quite simply, companies have to compete, and it is unfair of us to ask any one company to step forward and publish only these specifications in the consumer market.

SNIA ENTERPRISE TESTING

The enterprise sector has a much stronger chance of adopting the SNIA specification, but there are drawbacks. One is the prohibitive cost of the equipment required for testing.

The product evaluation/review community, by and large, is very interested in the SNIA specification as a standard for testing devices. We agree with the approach and methodology behind SNIA testing, and it would be wonderful to test with 100% compliance. Doing so, however, requires dedicated hardware, and currently only Calypso manufactures it.

As luck would have it, we met with SNIA and Calypso at Storage Visions 2012 and were quoted a ‘generous discount’ price of $27,500 for a Calypso testing platform. That is out of the question for most product evaluation websites, including The SSD Review, although we are always willing to sample, evaluate and retain a unit for long-term testing and analysis.

There is always the option of performing the tests manually, but that would be extremely time consuming, if not impossible. Extensive scripting could feasibly automate the test procedure and logging without dedicated hardware, and SNIA has mentioned this in its blogs. Unfortunately, SNIA has not released such a tool for users, and it is not clear whether it intends to. A few ‘grass-roots’ attempts have been made, but they have not resulted in any tangible progress.
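
The core of any such script is deciding when a drive has reached steady state. As we understand the SNIA PTS, a tracking variable (for example IOPS) is recorded once per round, and steady state is reached when, across a measurement window of five consecutive rounds, the spread of the values stays within 20% of their average and the best-fit line changes by no more than 10% of the average. The Python sketch below applies those two tests. The function names are hypothetical, the thresholds are parameters that should be checked against the published specification, and the IOPS figures are invented for illustration rather than measured.

```python
from statistics import mean


def is_steady_state(window, max_excursion=0.20, max_slope_excursion=0.10):
    """Check one measurement window against the two steady-state tests.

    window: tracking-variable values (e.g. IOPS) from consecutive rounds.
    The 20% / 10% defaults are our reading of the SNIA PTS; verify them
    against the published specification before relying on this.
    """
    n = len(window)
    if n < 2:
        raise ValueError("need at least two rounds")
    avg = mean(window)

    # Test 1 (data excursion): max - min must stay within 20% of the average.
    if max(window) - min(window) > max_excursion * avg:
        return False

    # Test 2 (slope excursion): fit y = a + b*x by least squares over
    # rounds x = 0..n-1; the fitted line may change by at most 10% of the
    # average between the first and last round of the window.
    xs = range(n)
    x_bar = mean(xs)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - avg) for x, y in zip(xs, window))
    slope = sxy / sxx
    return abs(slope) * (n - 1) <= max_slope_excursion * avg


def first_steady_window(rounds, window_size=5):
    """Return (first, last) 0-based round indices of the first window
    that passes both tests, or None if steady state was never reached."""
    for end in range(window_size, len(rounds) + 1):
        if is_steady_state(rounds[end - window_size:end]):
            return end - window_size, end - 1
    return None


# Illustrative (made-up) per-round IOPS for a drive decaying from FOB.
iops = [90000, 60000, 42000, 35000, 33000, 32500, 32200, 32400, 32100, 32300]
print(first_steady_window(iops))  # (3, 7)
```

Running the rounds back-to-back and feeding each result into a check like this is what lets a script stop as soon as the drive settles, rather than relying on a fixed, guessed number of rounds.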

At the end of the day, we believe that unless SNIA, or a manufacturer, comes forth with working software that independent third parties such as product evaluation sites can realistically use, widespread adoption of the specification will be difficult, if not impossible.

Independent third-party verification of performance is the bread and butter of product evaluation sites, and one of the reasons they attract hundreds of millions of viewers annually. I fear that if SNIA does not adopt an easy-to-use platform with realistic requirements, the standard may never see the light of day. So, where does that leave us?

THE “SPIRIT” OF SNIA

What may seem to be a scathing view of SNIA really is not. The methodology and concept behind their approach to testing are, in fact, very relevant. It is an excellent concept and standard, but for us it is out of reach. After intensive study of whether we could conduct our testing under the SNIA specifications, including discussions with SNIA representatives directly, we have concluded that full compliance is not possible for us.

What we can do is use most of the available tools that help get these devices into a steady state condition, so we have set forth an approach that operates in the ‘spirit’ of SNIA.
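
Part of getting a drive to steady state is simply writing enough data to it. As we understand it, the SNIA PTS begins with workload-independent preconditioning that writes twice the drive's user capacity using 128KiB sequential writes. A quick back-of-the-envelope estimate shows why even this first step takes a while. This is a sketch of the arithmetic only, not a description of our exact procedure; the function name, the 256 GB capacity and the 200 MB/s write rate are hypothetical examples, not figures from our testing.

```python
def precondition_seconds(user_capacity_gb, seq_write_mb_s, capacity_multiple=2.0):
    """Estimate the time to write `capacity_multiple` x the user capacity.

    Assumes a constant sustained sequential write rate (decimal GB and MB).
    A drive leaving its FOB state usually slows down as it fills, so treat
    the result as a lower bound rather than a prediction.
    """
    if seq_write_mb_s <= 0:
        raise ValueError("write rate must be positive")
    total_mb = user_capacity_gb * 1000 * capacity_multiple
    return total_mb / seq_write_mb_s


# Hypothetical 256 GB drive sustaining 200 MB/s sequential writes.
secs = precondition_seconds(256, 200)
print(f"{secs:.0f} s (~{secs / 60:.0f} min)")  # 2560 s (~43 min)
```

The steady-state test rounds only start after this, which is why a full run is measured in hours rather than minutes.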

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Hi Paul,

You say ‘All tests must be ran consecutively, with no interruptions’ and I understand why as any pause would allow GC activity the opportunity to step back from the transition to steady state. How do you kick off the next test in a series in IOmeter? Are you doing this manually in the IOmeter control panel (as quickly as you can) or have you automated it in some way?

Regds, JR