RAID CONTROLLER CONFIGURATIONS

Finding the best settings for each card is a time-consuming task, as every controller reacts differently depending on its firmware implementation. Both controllers used a 64k stripe size. Considerable performance optimizations can be made by varying the stripe size, but there are simply too many configurations to test for the purposes of this report.

LSI 9265-8i: FastPath recommended settings of Write Through, No Read Ahead, and Direct I/O

Adaptec 6805: Dynamic mode, NCQ enabled, with Write Back caching enabled. Read Caching is also enabled.

As can be seen throughout the review, there are some large differences in performance, and we tried every conceivable combination of settings in an attempt to boost performance. In many cases there simply wasn’t enough of a difference in the respective tests to warrant changing the settings. We tried a particularly wide range of settings with the 6805 in an attempt to eke out more performance. In the end, it was best to leave each controller on the settings that performed best across a wide range of tests.

BENCHMARK SOFTWARE AND METHODOLOGY

We will be using Iometer extensively for IOPS testing. Some metrics are hard to benchmark outside of real applications, so some charts will be accompanied by an explanation of real-world results. As always, any configuration should be evaluated and administered by a storage professional so that it is optimized for the workloads involved.
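Since Iometer reports IOPS while tools such as AS SSD and ATTO report MB/s, it helps to remember that throughput is simply IOPS multiplied by transfer size. The helper names below are hypothetical, and the sketch assumes decimal megabytes (1 MB = 1,000,000 bytes), which not every benchmark uses.

```python
def iops_to_mb_per_s(iops: float, block_size_bytes: int) -> float:
    """Throughput in decimal MB/s for a given IOPS rate and transfer size."""
    return iops * block_size_bytes / 1_000_000


def mb_per_s_to_iops(mb_per_s: float, block_size_bytes: int) -> float:
    """IOPS implied by a throughput figure at a fixed transfer size."""
    return mb_per_s * 1_000_000 / block_size_bytes


if __name__ == "__main__":
    # 100,000 random 4 KiB transfers per second is roughly 410 MB/s.
    print(f"{iops_to_mb_per_s(100_000, 4096):.1f} MB/s")
    # Conversely, 500 MB/s of 4 KiB transfers implies about 122,070 IOPS.
    print(f"{mb_per_s_to_iops(500, 4096):.0f} IOPS")
```

The same conversion works in either direction, so an IOPS chart and an MB/s chart can be compared as long as the transfer size is known.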

For SSD testing we will be utilizing the WEI benchmark, Anvil’s Storage Utilities, AS SSD, PassMark, and of course our staple of testing, PCMark Vantage.

OF SPECIAL NOTE

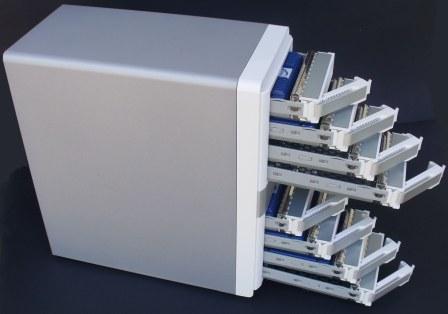

The Lightning LS 300S drives aren’t your standard equipment. To get maximum performance with a single controller, the devices have to be configured in WidePort mode, which uses two connections to one drive; in essence, it is like having two devices connected in one. The SAS expander we are using does support two connections to each device through failover mode, but the typical expander does not support WidePort in the way the SanDisk uses it, so we sourced some special cables to get the job done. Using these cables, we were able to connect to the rear of the expander and use it as a pass-through device, carrying the data through the expander and into the RAID controller. This is where the ability to daisy-chain the ARC-4036 really came in handy!

Here is a ‘mock-up’ of how we ran the drives with the LSI controller. The top two SAS cables went to the RAID controller, and the bottom two cables were used to connect the SanDisk drives. A typical cable allows four devices to be connected, but the special DualPort cables we used take two ports per device, for a total of two drives per cable. In this configuration we can leverage both the awesome power of the SanDisk Lightning LS 300S drives and the Mercury Extreme Pro SSDs at will.
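The cabling arithmetic can be sketched as follows. This assumes 6Gb/s SAS 2.0 lanes with 8b/10b encoding (about 600 MB/s usable per lane, per direction) and four lanes per Mini-SAS cable; the names are hypothetical, and the figures are link ceilings, not drive throughput.

```python
LANES_PER_MINI_SAS_CABLE = 4   # SFF-8087 / SFF-8088 cables carry four lanes
SAS2_LINE_RATE_GBPS = 6.0      # SAS 2.0 signaling rate per lane
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b: 8 data bits per 10 bits on the wire


def lane_mb_per_s() -> float:
    """Usable bandwidth of one SAS 2.0 lane in decimal MB/s, one direction."""
    return SAS2_LINE_RATE_GBPS * 1000 * ENCODING_EFFICIENCY / 8


def drives_per_cable(lanes_per_drive: int) -> int:
    """How many drives one four-lane cable can serve at a given port width."""
    return LANES_PER_MINI_SAS_CABLE // lanes_per_drive


def link_ceiling_mb_per_s(lanes_per_drive: int) -> float:
    """Upper bound on one drive's link bandwidth for a given port width."""
    return lanes_per_drive * lane_mb_per_s()


if __name__ == "__main__":
    for width, label in ((1, "narrow port"), (2, "wide port")):
        print(f"{label}: {drives_per_cable(width)} drives/cable, "
              f"{link_ceiling_mb_per_s(width):.0f} MB/s link ceiling per drive")
```

Under these assumptions, a two-lane wide port halves the number of drives per cable while doubling each drive’s link ceiling, which matches the two-drives-per-cable layout described above.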

We did run into a compatibility issue on this front with the Adaptec controller: it does not support the Areca 4036 enclosure’s expander ports, which is curious. The Areca SAS expander module is built on the LSI 28-port LSISAS2x28 expander IC, which is used in a wide variety of devices. To be honest, it is surprising that the Adaptec does not support this type of enclosure.

Of course, the LSI card will work with its own expander IC, but one would expect such a widely used IC to be supported by Adaptec as well.

To determine whether the incompatibility lay with the SanDisk EFDs or the Areca enclosure, we connected an array of standard SSDs to the rear ports of the enclosure. Unfortunately, none of the devices connected to the expander ports were recognized by the Adaptec controller. This is troubling when one considers the implications of incompatibility with widely used expander modules.

Our queries to Adaptec Support regarding this configuration were unfortunately not very fruitful; we were merely informed that the controller is not compatible with the enclosure. There haven’t been any firmware updates for the Adaptec controller since April of this year, which is quite a long time considering the number of new devices constantly coming onto the market.

A bit of an oversight there, but we did press on regardless.

The problem then became our cables. Surely, if we connected the SanDisk drives directly to the Adaptec controller, we could use them for testing. The special dual-port cables we are using terminate in an 8088 MiniSAS connector, which is compatible with our enclosure, but the Adaptec has internal 8087 connectors, so we sourced an adapter that let us move forward with a direct connection to the devices.

Using an 8087-to-8088 adapter, we were able to connect the dual-port SAS cables to a pair of standard 8087-to-8087 cables. These cables were then attached to the Adaptec controller, and off we went!

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

I unfortunately have the Adaptec 6805 controller and… LOL… where is my garbage can…

I’m running a setup with 4x Kingston 240GB HyperX in RAID 5. The ATTO results aren’t that shabby… I get 1.5GB/s write and 2.1GB/s read (sequential), but… the short random reads are just terrible… in Winsat it’s only 170 MB/s(!?!).

Funny thing is that when I turn off the controller cache, I get 9000 MB/s in Sequential 64 Read… 🙂

I don’t, however, get more than 5ms max response time vs. your 55ms value.

Winsat with all cache OFF (Swedish W7):

> Disk Sequential 64.0 Read 8922.99 MB/s 7.9

> Disk Random 16.0 Read 183.16 MB/s 7.6

> Responsiveness: Average IO Rate 1.01 ms/IO 7.9

> Responsiveness: Grouped IOs 7.94 units 7.5

> Responsiveness: Long IOs 1.98 units 7.9

> Responsiveness: Overall 15.69 units 7.9

> Responsiveness: PenaltyFactor 0.0

> Disk Sequential 64.0 Write 576.71 MB/s 7.9

> Average Read Time with Sequential Writes 0.494 ms 7.9

> Latency: 95th Percentile 0.936 ms 7.9

> Latency: Maximum 2.820 ms 7.9

> Average Read Time with Random Writes 0.542 ms 7.9

Winsat all cache ON:

> Disk Sequential 64.0 Read 1207.40 MB/s 7.9

> Disk Random 16.0 Read 170.22 MB/s 7.6

> Responsiveness: Average IO Rate 0.26 ms/IO 7.9

> Responsiveness: Grouped IOs 4.58 units 7.9

> Responsiveness: Long IOs 0.45 units 7.9

> Responsiveness: Overall 2.04 units 7.9

> Responsiveness: PenaltyFactor 0.0

> Disk Sequential 64.0 Write 882.76 MB/s 7.9

> Average Read Time with Sequential Writes 0.239 ms 7.9

> Latency: 95th Percentile 1.013 ms 7.9

> Latency: Maximum 5.359 ms 7.9

> Average Read Time with Random Writes 0.326 ms 7.9

Next steps to do:

PC –> Adaptec –> Garbage bin

LSI –> PC

Hahaaa… my 6805 beats the LSI in 2 of the 3 AS SSD copy benchmarks:

ISO 880 / 1.22

Program 377 / 3.73

Game 701 / 1.97

As an existing 6805 owner running four (4) Corsair 256GB (SATA-II) SSDs (I just have not upgraded to SATA-III yet, as my two-year-old SSDs are working so well), I have been interested in the LSI 9265-8i ever since you first published that article on the card with the eight (8) Patriot Wildfires. Wow! Did that ever open my eyes. I had never been a huge fan of LSI products because we have that controller running (embedded) in all of our Dell PowerEdge servers, and I was not overly impressed with that chip; besides, I was an “old-school Adaptec fan-boy,” as they’ve just been around so much longer.

However, that has all changed with this article and the prior one – Thanks Paul for an awesome review – as usual.

Once – and if – my existing Corsair SATA-II SSDs ever die or crash, I’ll be looking at upgrading my current workstation with the LSI 9265-8i (w/ FastPath) along with eight (8) OCZ Vertex 3 120GB SATA-III SSDs, and I might even add in that ARC-4036! The entire cost of everything is nearly identical to a single OCZ 960GB PCI-e RevoDrive 3 x2 card, and yet I should be maxing out the LSI RAID card’s full capability.

I’ve never “wished” for an existing SSD to die just so that I could “upgrade” – but if ever there was a time to crash and burn, it’s now, baby! Problem is, these dang Corsairs have been rock-solid for over two years now . . . thus, I could be waiting a while longer {: = (

Thanks guys for your feedback! It is nice to know that you guys are reading.

@bamer8906- I hope to see some of your results when you upgrade. I most certainly do not hope that you lose your devices, unless of course you have exercised due diligence with your data backup scheme 🙂

@Eero it would be helpful if you could post any of your testing results in our forum (as linked on the last page of the review).

This would allow us to discuss your testing and methodology in depth, so that we can get an accurate representation of your accomplishments.

Many things, such as motherboard, CPU, drives, stripe sizes, and card settings, can come into play here, so we would really need a “big picture” of these types of parameters.

I think that the comments section of the article will not give us enough room for this.

What about the LSI 9260 controller? Wouldn’t that be a more apples-to-apples comparison?

These are comparisons of the top-tier offerings from each manufacturer at the time of publication.

Your information is either outdated or flat-out biased. Why should anyone believe anything on your site when you have a huge LSI banner on every page? Have some intelligence. The 4K stripe is the worst Adaptec setting… yet you focus on that (proving my point). Even basic info like the packaging and pricing of the SSD caching solution from Adaptec (which, btw, kicks LSI’s butt in all the “real world” testing I’ve done) is wrong… it’s not a SW + SSD solution, it’s SW only, you can use any SSD vendor you want, and it’s approximately a $250 uplift in price over the card… not $1000+ as you say. Anyway, be smart… test in your own environment and decide.

We particularly enjoy posts like this because they give us the opportunity to invite the comment’s author to submit any test results that he has done or knows of. The simple fact is that advertising cannot ‘bend’ actual tests in any way, shape, or form, so the comment is invalid. As the Editor, I pay particularly close attention to such matters and can also point out that the author is an ‘Independent Evaluator’ and not staff of the site; he derives no income whatsoever from this. Looking forward to your return!

Word of clarification: all tests were conducted with a 64k stripe size. This is noted several times in the review.

Adjustments can be made to the stripe size to favor sequential (larger) or random (smaller) access, but any such change would also further optimize the other controller in the test scenario; gains would be felt equally (or nearly so) by both controllers. For the purposes of the review, it would also multiply the number of test results. As stated in the review, we are not testing every possible scenario, just what we felt would be an honest ‘baseline’ of overall performance.

We used 64k as a middle ground. This is a common size for arrays that lends itself to both random and sequential speed.
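To make the stripe-size tradeoff concrete, here is a minimal sketch of how a striped (RAID 0-style) layout maps a host request onto member drives. It assumes plain round-robin striping with no parity, and the helper name is hypothetical; real controllers add parity rotation, caching, and request coalescing on top. It shows that a small random request usually lands on a single drive regardless of stripe size, while a large sequential request spreads across more drives (up to the member count) as the stripe shrinks.

```python
def drives_touched(offset: int, length: int, stripe_size: int, num_drives: int) -> set[int]:
    """Return the set of member drives a request touches.

    Assumes simple round-robin striping: stripe unit k lives on drive k % num_drives.
    """
    first_unit = offset // stripe_size
    last_unit = (offset + length - 1) // stripe_size
    return {unit % num_drives for unit in range(first_unit, last_unit + 1)}


if __name__ == "__main__":
    KIB = 1024
    drives = 8
    for stripe in (16 * KIB, 64 * KIB, 256 * KIB):
        small = len(drives_touched(300 * KIB, 4 * KIB, stripe, drives))   # random 4 KiB
        large = len(drives_touched(0, 1024 * KIB, stripe, drives))        # sequential 1 MiB
        print(f"stripe {stripe // KIB:>3} KiB: 4 KiB request -> {small} drive(s), "
              f"1 MiB request -> {large} drive(s)")
```

With eight members and a 64 KiB stripe, a 1 MiB request engages every drive while a 4 KiB request still touches only one, which is why 64k is a reasonable middle ground.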

Any and all information regarding the MaxIQ caching of the Adaptec controller would be appreciated. The research that I conducted, and the information readily available on Adaptec’s site, all pointed toward branded SSDs being used in conjunction with their SSD caching. If there is in fact an error in the information provided, I will amend the article with the correct information. I am not trying to post inaccurate information, and I apologize if that has occurred. I have personally emailed “Fbomb” asking for further verification, and if it is provided by any source, the information will be corrected.

Note that we never made any claims about Adaptec’s SSD caching performance in comparison to LSI’s caching performance; we merely mentioned the competing solutions. We have in fact reached out to Adaptec several times to conduct testing of their caching solution, but as of this time we have not received a reply. I was intending to do a head-to-head test of the LSI and Adaptec caching solutions as part of our CacheCade Pro 2.0 review. The offer still stands for us to test the Adaptec solution.

Finally, I receive no financial gain from this website. It is owned wholly by Les Tokar, and I receive no financial compensation. The results speak for themselves, and I stand by them. Feel free to post your results in our forum, the link is on the last page of the review. Any requests for specific testing will be accommodated, as long as we have the hardware to provide them.

I would be interested in seeing some of your MaxIQ results!

I do not think that it is fair to change the default stripe size of the Adaptec to match the one from LSI. As you can see in the review from Tom’s Hardware, the Adaptec performs close to the LSI when run with their default stripe sizes. I have also benchmarked both with 16 SAS HDDs (I am not yet using SSDs in RAID because of reliability issues and high prices) and found that the Adaptec beats LSI’s cards quite often in application benchmarks. I would no doubt use the LSI card if I wanted to squeeze performance out of SSDs, but I would not want to pay the premium for HDD-based storage, nor would I want to give up the flash-based cache protection (too many problems with batteries in the past).

This review was configured with the best possible stripe for the total hardware configuration. Simply put, a different stripe would not have favored either card. Trying to compare two RAID card reviews, one using hard drives and the other SSDs, is truly like comparing a circle to a square: the two cannot be compared simply because the optimal stripe for an SSD is completely different from that for a hard drive. It has nothing to do with squeezing out performance, but rather with enabling both cards to perform to their fullest extent.

I think this review is a bit unfair. OK, each card was tested at its default settings, but if the Adaptec was tested with all caches enabled (and random workloads are obviously affected, especially by read-ahead), why wasn’t the LSI also tested with all caches enabled?

Word of Clarification-

Tom’s Hardware conducted their tests with a 64K stripe as well. If you look at the page of that review that shows the configuration screens, you can see their configuration in their own screenshot; they did not disclose their testing parameters, but they did provide a screenshot. 64K is a very common stripe size used in testing and evaluating RAID controllers.

A larger stripe size on the Adaptec would paint a prettier picture for sequential throughput, but it would reduce random performance by a much larger margin. In other types of testing, the controller would look even worse.

So, you have tradeoffs. For both of them.

For FastPath to be enabled, one must use Write Through. If Write Through were used with the Adaptec, the gap would have been much greater.

The settings that were used were by far the best for the Adaptec.

We conducted a long series of tests to determine the best settings; every single variation was tested.

Each controller needed to be tested to its best potential over a large range of tests.

In simple terms: there is no ‘magic’ setting for the Adaptec controller that is suddenly going to make it a racehorse.

I spent a large amount of time testing this controller with HDDs, SSDs, and EFDs. I also tested all configurations that were possible in order to try to paint the Adaptec in the best light possible.

If one were to read other results in other reviews with the Adaptec, they would see similar results.

Unfortunately, those other reviews are actually unfair to the LSI controller. Sounds weird, right? Not really. They simply do not have the devices to push the LSI to its full potential. Being an SSD-specific review site, we are lucky enough to have gear that others simply do not.

If they had the gear to push this hardware to its true potential, you would see the results just as plainly there as you do here.

I understood that there was going to be discord and disagreement when I posted this review, and I do appreciate the discussion.

Whenever there is such a big difference in performance there will always be questions that will be asked, but for clarification of the results, all one has to do is simply look at the other reviews of that controller and their publicly posted specifications.

The true performance of the LSI is the real story here: in other reviews, no one (that I am aware of) outside of professional validation companies (OakGate, etc.) has pushed these to their full potential and posted the results.

I encourage discussion of the testing, but would recommend that we take it to our forums. There is a link on the final page of the review.

Very well stated. Thanks Boss!

An interesting read, and interesting comments; thank you all.

Greetings Paul and friends,

I’m new to this forum, so a short honeymoon will be most appreciated.

I, for one, would like to see a cost breakdown for each of the discrete components you discussed in this excellent review, e.g. 5 x SanDisk Enterprise SSDs @ $6,800 = $34,000 + ?? The article begins by stating that the total was $45,000, so a breakdown of the remaining components (controllers, additional cables, external enclosure, etc.) would be nice to add here.
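Taking the figures quoted in this comment at face value, the drives account for most of the stated total, and the remainder still to be broken down works out as:

```latex
\[
5 \times \$6{,}800 = \$34{,}000,
\qquad
\$45{,}000 - \$34{,}000 = \$11{,}000
\]
```

That roughly $11,000 remainder is what would be split among the controllers, cables, and enclosure.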

FYI: my peculiar interest in solid-state storage is more inclined to explore reasonable alternatives for small office/home office (“SOHO”) users and singular enthusiasts who crave the “most bang for the buck”. Clearly, $45,000 is far beyond the individual budgets of these often overlapping market sectors, as are the SanDisk “Enterprise” drives you reviewed.

Also, a mere 5% improvement in the performance of workstations can accumulate significant benefits for small- to medium-sized organizations that employ expensive professionals.

I honestly believe that the IT industry can do a much better job of accelerating storage across the board for the SOHO sector, as opposed to “holding back” and requiring that sector to accept the “trickle-down” effect:

https://www.supremelaw.org/systems/superspeed/RamDiskPlus.Review.htm

Several questions that I have asked of vendors such as Intel still remain unanswered, e.g.:

(1) does TRIM work with an OS “software” RAID (i.e. where partitions are initialized as “dynamic” and either XP or Windows 7 manages RAID 0 logic)? Even though a Windows OS cannot be installed on such a RAID array, it offers a very cost-effective way to increase the performance of dedicated data partitions;

(2) is TRIM being enabled for native RAID arrays on future Intel chipsets?

(3) is it true that each SSD in a 2-member RAID 0 array will experience 50% as many WRITEs over time and thus the write endurance of each should double, and each SSD in a 4-member RAID 0 array will experience 25% as many WRITEs over time and thus the write endurance of each should quadruple?
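Question (3) can at least be reasoned about with a simple model. The sketch below uses hypothetical names and assumes plain round-robin RAID 0 striping; it ignores controller caching, filesystem metadata, and each SSD’s internal write amplification. It replays a stream of random writes and counts the host bytes landing on each member. Under those assumptions each of N members receives close to 1/N of the host writes, which is the premise behind the doubling/quadrupling intuition; it says nothing about how each drive’s internal wear behaves.

```python
import random


def bytes_per_member(writes, stripe_size: int, num_drives: int) -> list[int]:
    """Tally host bytes landing on each member of a round-robin RAID 0 array.

    `writes` is an iterable of (offset, length) pairs in bytes.
    """
    totals = [0] * num_drives
    for offset, length in writes:
        pos, end = offset, offset + length
        while pos < end:
            unit = pos // stripe_size                 # which stripe unit we are in
            unit_end = (unit + 1) * stripe_size
            chunk = min(end, unit_end) - pos          # bytes that fit in this unit
            totals[unit % num_drives] += chunk        # unit k lives on drive k % N
            pos += chunk
    return totals


if __name__ == "__main__":
    rng = random.Random(42)                          # fixed seed for repeatability
    stripe = 64 * 1024                               # 64 KiB stripe
    capacity = 64 * 1024**3                          # hypothetical 64 GiB volume
    sizes = [4096, 64 * 1024, 1024 * 1024]           # hypothetical write sizes
    workload = [(rng.randrange(0, capacity, 4096), rng.choice(sizes))
                for _ in range(20_000)]
    host_total = sum(length for _, length in workload)
    for n in (2, 4):
        shares = bytes_per_member(workload, stripe, n)
        print(f"{n} drives:", ", ".join(f"{b / host_total:.3f}" for b in shares))
```

Charting real Media Wear Indicators, as suggested below, would still be needed to confirm whether the even split of host writes translates into proportionally longer drive life.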

When I tried to encourage comments concerning the effect of RAID 0 on the write endurance of NAND flash SSDs at another Internet forum, my comments were promptly censored without any explanation whatsoever. Did I touch a nerve, and if so, why?

I believe question (3) above can and should be answered definitively with proper scientific experimentation e.g. by charting Media Wear Indicators at one end of the cables, and I/O requests at the other end of the cables (cf. Windows Task Manager and the extra “I/O” columns that can be added under the “Processes” tab).

Similarly, if you read the list of “Known Issues” in the documentation for Intel’s latest Solid-State Drive Toolbox (October 2011), there you will find a rather daunting list of obstacles for any SOHO system builder or administrator (Pages 4 thru 6).

I think the SSD industry would do well to strive for the kind of Plug-and-Play compatibility that is now available with most modern rotating platter drives: I can plug a modern WDC SATA HDD into just about any motherboard or add-on controller, and it’s off and running.

Thank you very much and … KEEP UP THE GOOD WORK!

MRFS

Good day MRFS… It’s good to see someone so dedicated in their thoughts and questions and, in order to facilitate the discussion, can I ask that we carry it to the forums? Start a forum thread and I believe that this will be an excellent topic of conversation.

Thanks in advance

Les

Thanks for the testing. Very informative.

We are in the process of testing both cards ourselves, but it appears the Adaptec 6805 doesn’t support the ARC-8026 SAS expander (based on the LSI SAS2x36/28 expander), so it’s out of the running.

You also mentioned that the 9265-8i can support 240 devices. I think you may have confused it with the 9285-8e, which supports 240 devices, while the 9265-8i supports only 128 (at least according to LSI, whom I contacted directly for confirmation). That is the only difference between the models, other than the 9285-8e having SFF-8088 external connectors and the 9265-8i having SFF-8087 internal ones.

There is also some confusing documentation that says the CacheCade Pro 2.0 feature (not out yet) will only be available on the 9265-8i, but one would suspect it would be available for the 9285-8e as well. If you have any contacts at LSI who could clear that up, that would be good to know.

Thanks for noticing the inaccuracy; we have amended the article to make the correction. I will see which controllers the next version of CacheCade will support. We have been assured of a testing opportunity with the next version as soon as it is ready, so stay tuned!

Costly, but now I’m even more glad I invested in the 9265-8i in the summer of 2011. I’m now getting great speeds with seven 1TB 7200rpm drives in RAID 5, roughly 2000 MB/sec writes and 1850 MB/sec reads, posted here:

https://tinkertry.com/webbios

I’m looking forward to playing with CacheCade 2.0 next near using a SATA3 SSD for my virtualization/backup server, complete parts list at:

https://tinkertry.com/vzilla

and method to reliably get into WebBIOS with Ctrl+H on an ASRock Z68 motherboard:

https://tinkertry.com/webbios

So glad thessdreview.com site exists, very useful info, I love to learn!

I might believe your results if you actually took the trouble to get your b****dy apostrophes correct.

I also want to chime in and say that we’ve put these cards back to back and found that the Adaptec performs far better than this review would suggest. Of course, we were using tens of 2TB drives rather than SSDs (as well as CacheCade 2.0 read/write vs. MaxCache 2.0 read/write), and a 6445Q card that an Adaptec rep custom-made for us (though it is not really any different from the 6805Q shipping now). We’ve been exclusively LSI, with several hundred cards deployed, but are designing new systems and considering a switch.

Granted, we run Linux, and driver differences can be significant. It’s actually a shame that the tests don’t include Linux as well, since I’d wager that most of these cards will end up in enterprise systems. It could be that Adaptec focuses on its Linux drivers (or its Linux driver developers are better), and the performance is much better there. We won’t know, though, until someone tests them back to back. I can understand not testing every possible configuration, but not testing a product on the platform it’s very likely to be used on (50/50 at least) seems like a missed opportunity.

As for our tests, as can be seen in the sequential tests in this benchmark, we actually found that the LSI card had problems sustaining heavy sequential writes (notice the huge spikes). In our tests, we exported several Fibre Channel LUNs from identical servers, one with a 9265 and one with the 6445Q; then, on the FC client, we built a RAID 1 across them and watched the I/O to ensure it was the same on both sides. The server with the LSI card suffered from a backlog of dirty memory, to the point that the system became unresponsive in I/O wait, while the one with the Adaptec card streamed the writes effortlessly. We then swapped cards between systems, and the problem followed the LSI card; it just didn’t seem able to sustain continued heavy writes. It was so bad that I was forced to reduce the benchmark from 5 minutes of sustained sequential writes to 3 minutes, because the LSI system would die before the test completed.

We otherwise didn’t see much performance variance at all between the 9265 and the 6445Q, each with twenty 2TB disks and two 240GB SSDs as cache. I will grant that you’re not going to see 400,000 IOPS of performance from 20 platters, but from other comments I gather that people are seeing better performance than the review indicates. I’m glad I found the review, but in the end it just raises more questions for me.

One thing I do like about the Adaptec MaxCache 2.0 is that it allows you to adjust the algorithm used to determine ‘hot’ data, as well as adjust read/write percentage of SSD. The LSI CacheCade 2.0 was just ‘on’ or ‘off’.

I do not see any mention of TRIM support in either of these devices, which is a very important concern with SSD usage over time. Testing shows that without TRIM support, SSDs can actually lose enough performance to dip below RAID 10 arrays of spinning drives once the SSD has been fully populated and must erase blocks to clear out space for new data. Does either of these devices support TRIM?

There is no TRIM support on RAID controllers. You need to have a physical connection to the OS for that.

The 9265 is great, but be warned: it’s picky about motherboards. I had massive problems trying to get it to work on an Asus Rampage III Formula motherboard with an i7-990X processor. I finally had to switch over to a Supermicro X8SAX, one of the few approved motherboards that supports an i7 processor. LSI doesn’t even want to talk to you if you attempt to use their card with a non-approved motherboard.

DrLechter, I’ve had a good working relationship with LSI support, with phone and email options all listed here:

https://www.lsi.com/about/contact/pages/support.aspx

I’m using a Z68 motherboard that isn’t on LSI’s list, but we got it working just fine, and LSI even points to the way I figured out to reliably get into MegaRAID WebBIOS:

https://tinkertry.com/lsi-knowledgebase-article-points-tinkertry-method-configure-lsi-raid-z68-motherboard/

Wish I had figured that out before returning my MSI motherboard, since the similar WebBIOS technique probably would have worked there too.

That said, I’m not saying LSI support could do anything about some fundamental hardware incompatibility. I’m just saying that LSI did very much talk to me, giving me reasonable support on minor issues I had, as an individual end-user of a personally purchased 9260-8i.

Later on, I also purchased a 9265-8i, became a blogger in mid-2011, and wrote about how to install drivers and get basic hardware monitoring working in the new ESXi 5.0 release, which had 9260 drivers built in; things were more complex on the 9265, as discussed here (and since fixed with ESXi Update Rollup 1):

https://tinkertry.com/lsi92658iesxi5

Most recently, I recorded some video of myself “kicking the tires” of an LSI-based RAID 5 array here:

https://tinkertry.com/raidabuse

And eagerly awaiting CacheCade Pro 2.0 for some SSD caching fun!

I make no claims to be anywhere near as technical as this great site, with far more benchmarking patience than I’ll ever have!

Very nice reply; I have been to your site and read about your testing before. Personally, I think you might get significantly more attention here, eheh

Can you keep adding SSDs all the way up to 256, or do you hit a performance bottleneck along the way? Could you do a benchmark with 2, 4, 8, and 16 SSDs to check whether the performance improvement is linear?

So it’s nowhere close to saturating the x8 bus.

The “x8 bus” speed varies depending on which PCI-E version has been implemented. PCI-E 2.0 signals at 5 GT/s per lane and uses 8b/10b encoding (10 bits on the wire per byte), for an effective bandwidth of 500 MBps per x1 lane in each direction. Thus, x8 PCI-E 2.0 lanes @ 500 MBps = 4.0 GBps. The PCI-E 3.0 spec raises the signaling rate to 8 GT/s and switches to the more efficient 128b/130b encoding, for roughly 1.0 GBps (about 985 MBps) per x1 lane. Thus, x8 PCI-E 3.0 lanes @ 1.0 GBps ≈ 8.0 GBps. Assuming a rough average of 500 MBps per 6G SSD, 16 such SSDs would be needed to come close to saturating x8 lanes of PCI-E 3.0, and 32 such SSDs would be needed to saturate x16 lanes, e.g. a RAID controller with an x16 edge connector.
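The lane arithmetic above can be checked with a short sketch. It uses the per-lane signaling rates and encoding ratios of PCI Express 2.0 and 3.0, ignores packet and protocol overhead (so real throughput is lower), and keeps the comment’s assumption of roughly 500 MB/s per 6G SSD; the function names are hypothetical.

```python
import math
from fractions import Fraction

# Per-lane signaling rate (GT/s) and line-encoding efficiency for each generation.
PCIE_GENERATIONS = {
    "2.0": (5, Fraction(8, 10)),     # 8b/10b encoding
    "3.0": (8, Fraction(128, 130)),  # 128b/130b encoding
}


def lane_mb_per_s(gen: str) -> float:
    """Usable MB/s per lane, per direction, before packet/protocol overhead."""
    gt_per_s, efficiency = PCIE_GENERATIONS[gen]
    return float(gt_per_s * 1000 * efficiency / 8)  # bits -> bytes


def ssds_to_saturate(gen: str, lanes: int, mb_per_ssd: float = 500.0) -> int:
    """Rough count of SSDs at mb_per_ssd needed to fill the link."""
    return math.ceil(lanes * lane_mb_per_s(gen) / mb_per_ssd)


if __name__ == "__main__":
    for gen in PCIE_GENERATIONS:
        for lanes in (8, 16):
            gb_per_s = lanes * lane_mb_per_s(gen) / 1000
            print(f"PCIe {gen} x{lanes}: {gb_per_s:.2f} GB/s, "
                  f"~{ssds_to_saturate(gen, lanes)} SSDs at 500 MB/s")
```

Under the same assumptions, an x8 PCIe 2.0 card such as the 9265-8i tops out near 4 GB/s, which about eight such SSDs could fill.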

Can you add HighPoint’s high-end card to the mix as well?

Why did you show RAID5 read tests? There’s no penalty for reads in RAID5. Show us RAID5 write tests. That will show the true speed of the controller. Also, why no RAID10 comparisons?
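The reason RAID 5 write tests are the more revealing measure is the read-modify-write cycle: updating one small block in a parity stripe costs two reads (old data, old parity) and two writes (new data, new parity). The sketch below applies the commonly used rule-of-thumb write-penalty factors to estimate small random-write IOPS. The names and the per-drive figure are hypothetical, and a controller’s write-back cache or full-stripe writes can do considerably better than this model.

```python
# I/Os issued to member drives for each small host write (rule-of-thumb values).
WRITE_PENALTY = {
    "RAID 0": 1,   # write the data block only
    "RAID 10": 2,  # write the block to both mirrors
    "RAID 5": 4,   # read data + read parity, then write data + write parity
}


def random_write_iops(level: str, drives: int, iops_per_drive: float) -> float:
    """Estimated small random-write IOPS for an array without write-back caching."""
    return drives * iops_per_drive / WRITE_PENALTY[level]


def random_read_iops(drives: int, iops_per_drive: float) -> float:
    """Small random reads can be serviced by any member, so no penalty applies."""
    return drives * iops_per_drive


if __name__ == "__main__":
    drives, per_drive = 8, 40_000   # hypothetical: eight SSDs at 40K IOPS each
    print(f"reads (any level): {random_read_iops(drives, per_drive):,.0f} IOPS")
    for level in WRITE_PENALTY:
        print(f"{level} writes: {random_write_iops(level, drives, per_drive):,.0f} IOPS")
```

In this model a RAID 5 array delivers only a quarter of its read IOPS on small random writes, so the controller’s parity handling matters far more there than on reads.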

How come the 6805 performs better at RAID 5 with traditional hard drives than the 9265? Shouldn’t the fastest card be fastest at RAID 5? Is it the firmware?

Thanks.

I was considering using an Adaptec 6805 with four Intel SSD 330 180GB drives in RAID 10. I keep wondering what will happen over the long run since there is no TRIM. How would the drives ever optimize?

I can’t believe there’s no mention of TRIM. This article is a waste of time for anyone planning to use SSDs in RAID long term.

Isn’t the speed of the Adaptec a bit slow? Makes me wonder why that is. Perhaps because of the cables? If it were 10% or even 20% slower, OK, but at half the speed, something must be wrong with it…

I know this article is old, but I’m in the market for a good RAID card, so I thought I’d share the following info for anyone else who is (I’m surprised at the lack of recent RAID controller reviews… I can only find old ones).

Although the credibility of this article is hindered by the abundance of LSI banners on the website and by the author’s over-the-top, obvious ‘LSI-fanboy’ tone (more professionalism would have helped), the numbers are actually not unexpected, and I’m surprised nobody has mentioned this: the Adaptec 6805’s own specs mention a maximum of 50K IOPS. That’s about it… it’s a limitation of the controller and its slow RAM. So it’s not surprising at all that it can’t reach the impressive numbers that the LSI 9265 did.

However, the Adaptec 6805 is not in the same class as the LSI 9265. A better comparison nowadays would be between the LSI 9271 (same RoC, same RAM, but PCI-e 3.0) and the Adaptec 7805 (much improved RoC, DDR3 RAM, and PCI-e 3.0, just like the LSI 9271). Adaptec advertises the 7805 at more than 530K IOPS. Prices are comparable.

I’d be very interested to see a review of the two.