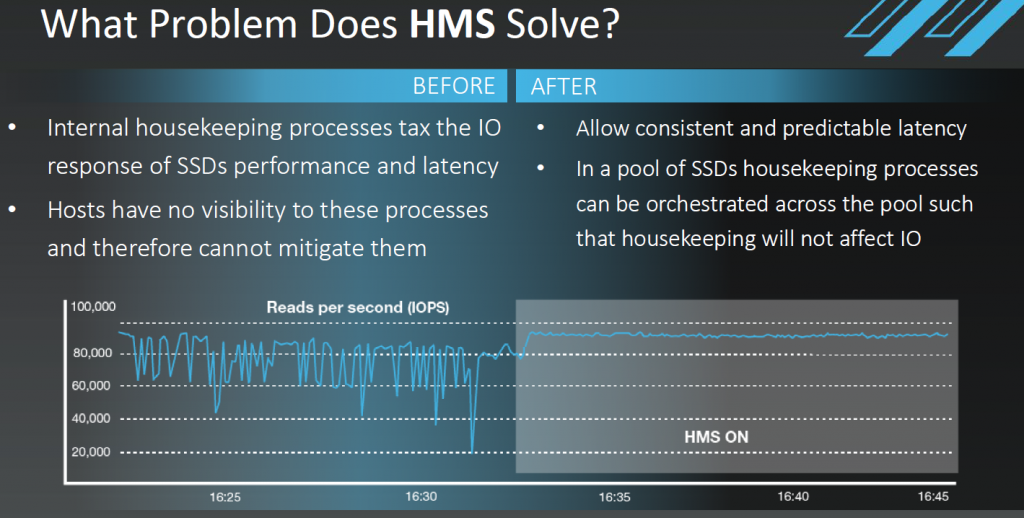

Today, OCZ is introducing HMS technology in their Saber 1000 2.5” SATA 6Gb/s SSDs. What is HMS? HMS, or Host Managed SSD technology, is a new frontier for SSDs, and the initiative is referred to as Storage Intelligence. HMS functionality is now defined by the T10 Technical Committee and is in the ratification process in the T13 Technical Committee (AT Attachment). This technology allows the host to coordinate garbage collection, log dumps, and drive geometry data to improve overall storage performance and, specifically, to enable consistent and predictable latency across a large pool of SSDs.

Hyperscale, cloud, and datacenter operators now run software-defined storage applications that manage large pools of SSDs across multiple servers. Consistent performance from these SSDs is critical to many modern applications, such as databases and online trading, that have migrated to the cloud. Exposing HMS functions via an API enables their integration into storage stacks.

“We have listened to our customers and they require not only high performance, but consistent high performance,” said Oded Ilan, General Manager of OCZ’s R&D Team in Israel. “Our new Saber HMS SSD, together with a software library and API, enable for the first time software orchestration of internal housekeeping tasks across large pools of SSDs, thus overcoming performance barriers that were simply not possible to address without this technology.”

The HMS-enabled Saber 1000 SSDs are managed by software APIs that provide control over the HMS functionality within the Saber HMS SSD and allow IT administrators to create multiple volumes and intelligently aggregate the pool of SSDs. Because static virtualization in RAID cannot balance real-world workloads across multiple SSDs, OCZ implements dynamic virtualization. Dynamic virtualization allows every volume address to be stored on any physical address, and this mapping may change over time. Though it is more complex than static virtualization, it offers greater flexibility and a more efficient infrastructure for implementing storage services such as thin provisioning, de-duplication, and storage tiering.
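
To make the idea of dynamic virtualization concrete, here is a minimal Python sketch of a volume map in which any volume address can land on any device and can be moved later. It is only an illustration and not OCZ's implementation or API: the class `DynamicVolumeMap`, its methods, and the device names `ssd0` to `ssd2` are all hypothetical.

```python
class DynamicVolumeMap:
    """Toy logical-to-physical map (hypothetical, not OCZ's library).

    Any volume address may be placed on any free block of any device,
    and the placement may change on a later write.
    """

    def __init__(self, devices, blocks_per_device):
        # Free physical locations as (device, block) pairs, interleaved by device.
        self.free = [(d, b) for b in range(blocks_per_device) for d in devices]
        self.table = {}  # volume address -> (device, block)

    def write(self, volume_addr, allowed_devices=None):
        """Place (or re-place) a volume address on a free block of an allowed device."""
        for i, (dev, _blk) in enumerate(self.free):
            if allowed_devices is None or dev in allowed_devices:
                new_loc = self.free.pop(i)
                old_loc = self.table.get(volume_addr)
                if old_loc is not None:
                    self.free.append(old_loc)  # the old copy becomes reclaimable
                self.table[volume_addr] = new_loc
                return new_loc
        raise RuntimeError("no free block on an allowed device")

    def lookup(self, volume_addr):
        """Return the current (device, block) for a volume address."""
        return self.table[volume_addr]


vmap = DynamicVolumeMap(devices=["ssd0", "ssd1", "ssd2"], blocks_per_device=2)
first = vmap.write(7)                              # lands on the first free block
second = vmap.write(7, allowed_devices={"ssd2"})   # same address, moved to ssd2
print(first, second, vmap.lookup(7))
```

In this sketch the table only holds entries for addresses that have actually been written, which hints at why a dynamic map is a convenient base for services like thin provisioning; a static RAID layout, by contrast, fixes the device for each address up front.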

In a typical HMS implementation, the SSDs are divided into three groups: one group is in Maintenance Mode while the other two are in Active Mode (customers can take this and modify it for their own implementations as needed).

In Maintenance Mode, each device in the group typically performs maintenance operations at the device level (such as garbage collection and log dumps); the group can also perform maintenance operations at the group level (such as data balancing and storage tiering). During Maintenance Mode, the host should not send read or write commands to any of the devices within the group.

In Active Mode, on the other hand, each device in the group serves incoming read and write commands from the host without delays or stalling. GC and log dump operations are suspended in this mode, so the host must ensure that no device receives a write command without having enough free pages to serve it.

When the time comes, the groups rotate phases in a round-robin manner. Assuming three groups (G) of drives, G2 will enter maintenance mode (while G1 and G3 are in active mode), then G3 will enter maintenance mode (while G1 and G2 are in active mode), and so on. No group is in both modes at the same time, which is the key to providing optimal performance: the SSD controller doesn’t need to balance housekeeping against host I/O.
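
The round-robin rotation described above can be sketched in a few lines of Python. This is only an illustration of the scheduling idea under the three-group assumption from the text; the function name `rotation` and the group labels are hypothetical and do not come from OCZ's software library.

```python
from itertools import islice


def rotation(groups, first):
    """Yield (maintenance_group, active_groups) for each phase, round robin.

    Exactly one group is in maintenance per phase; all others are active.
    """
    start = groups.index(first)
    step = 0
    while True:
        maintenance = groups[(start + step) % len(groups)]
        active = [g for g in groups if g != maintenance]
        yield maintenance, active
        step += 1


GROUPS = ["G1", "G2", "G3"]

# Take the first four phases, starting with G2 in maintenance as in the text.
schedule = list(islice(rotation(GROUPS, "G2"), 4))
for phase, (maintenance, active) in enumerate(schedule):
    print(f"phase {phase}: maintenance={maintenance}, active={active}")
```

A host using a schedule like this would route reads and writes only to the groups listed as active in the current phase, and would let the maintenance group run garbage collection and log dumps undisturbed.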

The Saber 1000 SATA Series is OCZ’s first product to support HMS controls and includes usable capacities of 480GB and 960GB in a 2.5” x 7mm enclosure. Designed for cost-sensitive, read-intensive, large-scale deployments, Saber 1000 HMS delivers the consistent performance datacenters require, at a price point that enables superior ROI. Sequential speeds are rated at up to 550MB/s read and 475MB/s write, and the drives are rated for up to 91K/22K read/write IOPS.

OCZ is available to support volume development partners, and Saber 1000 HMS will be available in bulk through normal sales channels. These SSDs are covered by a warranty that runs for 5 years or until the average P/E count across the SSD is used up, which is 3,000 P/E cycles for these models. The drives are in the final stages of firmware validation and should be available in November. As of today, however, all documentation and downloads are available on OCZ’s website. Finally, in terms of pricing, MSRPs are $370 (480GB) and $713 (960GB) for both the base Saber 1000 and Saber 1000 HMS models. These prices will vary greatly depending on the size of the order, however.

OCZ is also providing:

- A reference design and demonstration platform which demonstrates the functionality of Saber HMS, and enables benchmarking the HMS system performance in real time.

- A software library and a Programmer’s Guide so HMS can be easily integrated into storage stacks of storage OEMs or software defined storage applications.

It is an interesting move, as you are essentially getting this new technology for free. It is almost as if OCZ is selling the new idea rather than the SSD itself, pushing for a broad market approach in the hope that the technology will be widely accepted.

The implementation out now is only the beginning. HMS as it stands essentially starts, stops, or pauses garbage collection, shows the device’s geometry down to the page and block, and starts and stops metadata log dumps. OCZ is hoping that once more people get hold of the software source code, they will find new ways in which it can be used to optimize flash storage. OCZ and Toshiba already have plans to deliver more extensive features in their next implementations of HMS SSDs as well. With an ever-increasing focus on higher performance and lower cost, OCZ views HMS as the next evolution in storage systems design.

The SSD Review The Worlds Dedicated SSD Education and Review Resource |

Would HMS make GC possible with RAID 5?

Garbage collection already takes place regardless of whether the drives are in a RAID array. HMS will simply allow the user to manually start, stop, or pause GC based on the configuration and usage of the storage array.

Did you mean TRIM?